MVP to Production AI

Optimize and Scale Your AI Agent's Tool Calling

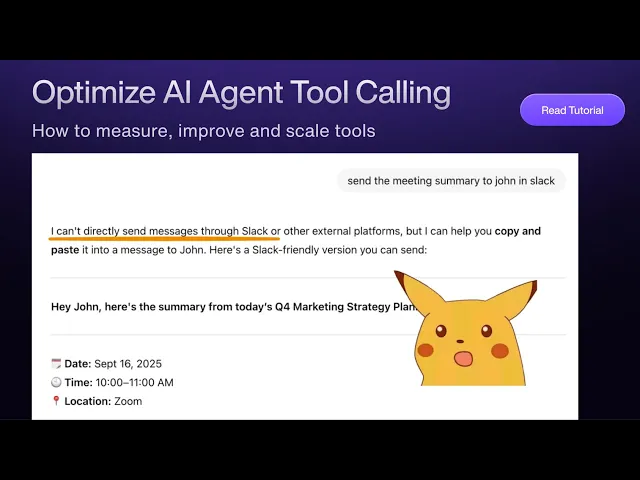

Improve your AI agent's tool calling. We go over how to measure tool calling performance, how to improve it, and how to scale the number of tools your agent can use.

You have an AI agent that can call tools to search the web and call APIs. Your team rejoices! Hold on… Why did the agent call the tool successfully here and not there?

This universal experience (aka frustration) we’ve all had building and using AI agents naturally leads us down the path of improving tool calling.

In this deep dive, we’ll go over:

- What it means to be “good” at tool calling
- How to improve tool calling
- Why agents struggle with tools at scale
- How to implement tools at scale

What it means to be “good” at tool calling

Reviewing AI responses can be like reviewing a movie. Whether or not you liked a movie is partly objective and partly a matter of subjective taste. Evaluating tool calling is no different. That being said, there are two main metrics for measuring tool performance:

- Tool correctness
- Task completion

Tool Correctness

Tool correctness measures whether the agent selected the right tool or set of tools (1). It is scored objectively: each expected tool was either called or it wasn’t. It’s not subjective. It’s a straightforward statistic.

Here’s an example. A Cursor user building a web UI asks Cursor to make their sidebar collapsible. The Cursor development team may define the expected tools for this task as the READ CODE tool, the EDIT CODE tool, and the LINT tool.
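To make the Cursor example concrete, here is a minimal sketch of one way to score tool correctness: the fraction of expected tools that the agent actually called. The function name and the lowercase tool names are illustrative assumptions, and DeepEval’s exact formula may differ from this one.

```python
def tool_correctness(expected_tools, called_tools):
    """Return the fraction of expected tools that were called.

    Assumption: extra tool calls and call order are ignored.
    """
    expected = set(expected_tools)
    if not expected:
        return 1.0  # nothing was expected, so nothing was missed
    called = set(called_tools)
    return len(expected & called) / len(expected)


# Hypothetical names for the READ CODE, EDIT CODE, and LINT tools.
expected = ["read_code", "edit_code", "lint"]
called = ["read_code", "edit_code"]  # the agent skipped the lint step

print(f"{tool_correctness(expected, called):.2f}")  # prints 0.67
```

A stricter variant could also penalize unexpected tool calls or require a specific call order, depending on what “correct” means for your product.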

Task Completion

Task completion assesses end-to-end success on the prompted task. An LLM judge (while a bit subjective) provides the score that quantifies to what extent the job was completed using tools. Here is DeepEval’s methodology for task completion (1).
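The LLM-judge idea can be sketched as follows. This is not DeepEval’s implementation: the prompt wording, the `task_completion` function, and the `fake_judge` stand-in are all assumptions made for illustration. In a real setup, `judge` would wrap a call to your model provider.

```python
import re
from typing import Callable, List

# Hypothetical judge prompt; a production prompt would be more detailed.
JUDGE_PROMPT = """You are grading an AI agent.
Task: {task}
Tools called: {tools}
Final outcome: {outcome}
Reply with a single number between 0 and 1 for how fully the task was completed."""


def task_completion(task: str, tools_called: List[str], outcome: str,
                    judge: Callable[[str], str]) -> float:
    """Ask a judge model for a completion score and clamp it to [0, 1]."""
    prompt = JUDGE_PROMPT.format(
        task=task, tools=", ".join(tools_called), outcome=outcome
    )
    reply = judge(prompt)
    match = re.search(r"\d*\.?\d+", reply)  # first number in the reply
    if match is None:
        raise ValueError(f"Judge reply contained no score: {reply!r}")
    return min(1.0, max(0.0, float(match.group())))


def fake_judge(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    return "0.8"


score = task_completion(
    task="Make the sidebar collapsible",
    tools_called=["read_code", "edit_code", "lint"],
    outcome="Sidebar collapses, but the toggle button is missing a label.",
    judge=fake_judge,
)
print(score)  # prints 0.8
```

Passing the judge in as a function keeps the scoring logic testable without network access.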

In the Cursor example:

In summary, tool correctness is a proxy for “the agent is calling the right tools.” Task completion is a proxy for “the agent is using tools correctly to answer the user’s prompt.” Both are necessary for a production-level agent. Let’s talk about how to improve your tool correctness and task completion scores.

How to improve tool calling

The main settings that affect tool calling performance are:

- LLM choice
- System prompts
- Number of tools to choose from
- Tool description quality

We ran evaluations on a set of 50 test cases, varying each setting in turn (2). Most of these test cases (36 of 50) were designed to test one specific tool. The other 14 involved chaining multiple tools. Here are a few examples:

High-level takeaways

Our tests show that LLM choice has the largest impact on tool calling performance. This makes sense, as model providers like OpenAI and Anthropic have set their sights on building better models for tool calling.

The other settings saw either negligible or mixed effects on tool performance.
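A sweep over these settings could be organized like the sketch below. The setting values mirror the labels used in this article’s results, but the full grid, the `configurations` and `average_score` helpers, and the `always_pass` stub are assumptions. The original evaluation may have varied one setting at a time rather than every combination.

```python
from itertools import product
from statistics import mean

# Setting values taken from the labels in this article's result tables.
SETTINGS = {
    "model": ["gpt-4o", "3.5-sonnet"],
    "system_prompt": ["base", "light", "descriptive"],
    "description": ["regular-detailed", "extra-detailed"],
    "routing": [True, False],
}


def configurations(settings):
    """Yield one dict per combination of setting values."""
    keys = list(settings)
    for values in product(*(settings[key] for key in keys)):
        yield dict(zip(keys, values))


def average_score(config, test_cases, run_case):
    """Average a per-case score (e.g. tool correctness) for one config."""
    return mean(run_case(config, case) for case in test_cases)


def always_pass(config, case):
    """Hypothetical stub; a real run_case would invoke the agent."""
    return 1.0


configs = list(configurations(SETTINGS))
print(len(configs))  # prints 24 (2 * 3 * 2 * 2)
print(average_score(configs[0], ["case-1", "case-2"], always_pass))
```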

In the weeds

You may be thinking, “Wow, there’s not much I can do besides pick the frontier models.”

While that’s partly true, a closer look at the data shows that prompts, descriptions, and the number of tools do have a meaningful impact on complex tasks.

Evaluating GPT-4o, we see this impact on complex task performance.

| System Prompt | Tool Correctness | Task Completion |
|---|---|---|
| Base | 44.1% | 37.5% |
| Light | 51.2% | 42.9% |
| Descriptive | 51.2% | 53.9% |

| Description | Tool Correctness | Task Completion |
|---|---|---|
| regular-detailed | 44.1% | 37.5% |
| extra-detailed | 50% | 50% |

Not every setting affects every model the same way. When we say that varying the number of tools is “beneficial for certain LLMs,” we mean that routing to sub-agents, each with a smaller subset of tools, raised tool correctness for 3.5-Sonnet but not for GPT-4o.

GPT-4o results:

| Routing | Tool Correctness | Task Completion |
|---|---|---|
| Routing | 73.3% | 55.8% |
| No-Routing | 74.8% | 53.0% |

3.5-Sonnet results:

| Routing | Tool Correctness | Task Completion |
|---|---|---|
| Routing | 75.8% | 60.3% |
| No-Routing | 67.6% | 65% |

In summary, using the newest frontier models is the most reliable way to improve tool calling. That part is more or less “science.” The “art” of optimizing tool performance is tuning each setting for tool correctness and task completion, because no single setting (no “one prompt” or “one right number of tools”) will optimize every model.

While implementing tools has an evaluation side and a trial-and-error side, there’s also an engineering side that’s more cut-and-dried. Here are the engineering challenges that arise from scaling your agent’s tools.

Why agents struggle with tools at scale

It’s easy to forget that tools are still just tokens at the end of the day. LLMs decide when to call a tool based on the tool name, tool description, input names, and input descriptions. The code behind the tool or function call runs in your backend (it is not run by the model provider). The result of that code is then returned to the LLM.
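The flow described above can be sketched with a stand-in model. Everything here is an assumption for illustration: the `search_web` tool, the simplified message format (which is not any provider’s exact wire format), and `fake_model`, which replaces a real API call so the example runs offline.

```python
import json

# What the LLM sees: name, description, and input schema. All of it is tokens.
TOOLS = [{
    "name": "search_web",
    "description": "Search the web and return the top result titles.",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Search terms"},
        },
        "required": ["query"],
    },
}]


def search_web(query):
    """The tool's code. It runs in your backend, not at the model provider."""
    return [f"Result for {query}"]


HANDLERS = {"search_web": search_web}


def fake_model(messages, tools):
    """Hypothetical stand-in for a provider API call."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"content": f"Here is what I found: {last['content']}"}
    arguments = json.dumps({"query": last["content"]})
    return {"tool_call": {"name": "search_web", "arguments": arguments}}


def run_agent(user_prompt, model=fake_model):
    """Loop until the model answers; count round trips to the model."""
    messages = [{"role": "user", "content": user_prompt}]
    round_trips = 0
    while True:
        reply = model(messages, TOOLS)
        round_trips += 1
        call = reply.get("tool_call")
        if call is None:
            return reply["content"], round_trips
        # Execute the requested tool locally and hand the result back.
        result = HANDLERS[call["name"]](**json.loads(call["arguments"]))
        messages.append(
            {"role": "tool", "name": call["name"], "content": json.dumps(result)}
        )


answer, trips = run_agent("tool calling")
print(trips)  # prints 2: one trip for the tool call intent, one for the answer
```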

The tool calling process means that:

- Tool descriptions eat up tokens in the context window
- Two round trips are required: one for the initial tool call intent and another for the tool call results
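To get a feel for the first point, the sketch below estimates how tool definitions add up as the tool count grows. The four-characters-per-token figure is only a rough rule of thumb for English text, and `make_tool` generates hypothetical tools. For real numbers, count tokens with your model provider’s tokenizer.

```python
import json


def estimate_tokens(text):
    """Very rough estimate: assume about 4 characters per token."""
    return len(text) // 4


def make_tool(index):
    """Build a hypothetical tool definition of typical size."""
    return {
        "name": f"tool_{index}",
        "description": "Hypothetical tool that performs one action "
                       "against a third-party API.",
        "parameters": {
            "type": "object",
            "properties": {
                "id": {"type": "string", "description": "Record identifier"},
            },
            "required": ["id"],
        },
    }


def tool_overhead(tools):
    """Estimated tokens spent on tool definitions in every request."""
    return sum(estimate_tokens(json.dumps(tool)) for tool in tools)


for count in (5, 20, 80):
    tools = [make_tool(i) for i in range(count)]
    print(count, "tools ->", tool_overhead(tools), "estimated tokens")
```

Because the definitions are sent with every request, this overhead is paid on each round trip, not once per conversation.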

As you give your agent more and more capabilities in the form of tools, you must also reckon with tools eating up your context window and with breakdowns caused by having too many tools.

This may be a bit extreme, as your product may not need more than 80 tools. However, filtering and tool selection will have performance and cost benefits. Let’s talk about how to engineer an agent system that can handle an increasing array of tools.

How to implement tools at scale

If tools eat up tokens and hog the context window, the fix is to load only the relevant tools. Here are a few designs that work:

Let users decide

How many times has one of your users said, “Whoa, I didn’t know the product could do that!” You’ve probably experienced it yourself when you discovered a new iPhone setting or keyboard hotkey.

Letting users decide which tools to give your agent not only limits the number of tools, but also makes it clear to your users which tools are available.
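A minimal sketch of this pattern: keep a registry of tools grouped by integration and load only the groups a user has switched on. The registry contents and the `tools_for_user` helper are hypothetical and are not ActionKit’s actual tool names or API.

```python
# Hypothetical registry: integration name -> tool definitions.
TOOL_REGISTRY = {
    "gmail": [
        {"name": "gmail_send_email", "description": "Send an email."},
        {"name": "gmail_search_inbox", "description": "Search the inbox."},
    ],
    "salesforce": [
        {"name": "salesforce_search_records", "description": "Search records."},
    ],
    "slack": [
        {"name": "slack_post_message", "description": "Post a message."},
    ],
}


def tools_for_user(enabled_integrations, registry=TOOL_REGISTRY):
    """Return only the tools for integrations the user enabled."""
    tools = []
    for integration in enabled_integrations:
        # Unknown integration names are skipped rather than raising.
        tools.extend(registry.get(integration, []))
    return tools


# A user who toggled on Gmail and Slack in the UI.
selected = tools_for_user(["gmail", "slack"])
print([tool["name"] for tool in selected])
```

The same list of enabled integrations can drive the UI, so users see exactly which tools the agent has.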

Using an example where an agent is using ActionKit to provide tools for different integration providers:

Multi-agent pattern

If you want your agent to decide on the right subset of tools needed to complete the user’s prompt, patterns like routing and orchestration can filter tools for your agent (3).

In the planner-worker implementation, a “planner” agent creates a plan of which integrations are necessary for the task. For example, if a user asks for their email inbox, the planner agent will respond with ['gmail']. If a user asks about Salesforce and Gmail, the planner agent will respond with ['salesforce', 'gmail'].

Based on the plan’s list of integrations, ActionKit builds tools dynamically, providing the right descriptions and input schema for the different actions across an integration like Gmail.

A worker agent then uses only the integration-specific tools in its request to the OpenAI API.

End-to-end, the agent system loads only the relevant tools based on the user prompt.
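The planner-worker flow can be sketched as below. This is an illustration under assumptions, not Paragon’s implementation: the keyword-matching `plan_integrations` stands in for an LLM planner, `build_tools` stands in for ActionKit’s dynamic tool building, and the request payload is simplified rather than the exact OpenAI API format.

```python
# Hypothetical keyword hints; a real planner would be an LLM call.
INTEGRATION_KEYWORDS = {
    "salesforce": ["salesforce"],
    "gmail": ["gmail", "email", "inbox"],
    "slack": ["slack"],
}

# Hypothetical actions per integration.
INTEGRATION_ACTIONS = {
    "salesforce": ["search_records", "create_record"],
    "gmail": ["send_email", "search_inbox"],
    "slack": ["post_message"],
}


def plan_integrations(prompt):
    """Planner step: return the integrations the prompt needs."""
    text = prompt.lower()
    return [
        name
        for name, keywords in INTEGRATION_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]


def build_tools(integrations):
    """Build tool definitions only for the planned integrations."""
    return [
        {"name": f"{name}_{action}", "description": f"{action} via {name}"}
        for name in integrations
        for action in INTEGRATION_ACTIONS[name]
    ]


def worker_request(prompt):
    """Worker step: assemble a request that carries only relevant tools."""
    tools = build_tools(plan_integrations(prompt))
    return {"messages": [{"role": "user", "content": prompt}], "tools": tools}


print(plan_integrations("Show me my email inbox"))
# prints ['gmail']
print(plan_integrations("Summarize my Salesforce leads and Gmail threads"))
# prints ['salesforce', 'gmail']
```

The trade-off is an extra model call for planning in exchange for a much smaller tool list in the worker’s context window.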

MCP provided tools

MCP servers are similar to multi-agent patterns. Rather than a “planner” agent that lives in your application backend, an MCP server can decide on tools and dynamically provide them to your agent.

An important distinction here: MCP servers do NOT inherently provide tool selection out-of-the-box. The Model Context Protocol is a standard that covers features such as prompts, resources, sampling, and tools. MCP servers can help select tools, but the standard does not require them to.

In summary, don’t rely on 3rd-party MCP servers to solve tool selection for your agent. You can build your own MCP server that handles tool selection, or opt for an agent pattern like planner-worker to handle the MCP-provided tools.
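If you handle selection yourself, one simple option is to filter the tool list an MCP server returns before passing it to your agent. The sketch below assumes tools arrive as dictionaries with `name` and `description` fields, as in an MCP tool listing. The keyword-overlap scoring and the `select_tools` helper are illustrative assumptions. A production system would more likely use embeddings or an LLM planner.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_tools(tools, prompt, limit=3):
    """Keep the tools whose name and description best overlap the prompt."""
    prompt_words = tokenize(prompt)
    scored = []
    for tool in tools:
        tool_words = tokenize(tool["name"] + " " + tool.get("description", ""))
        overlap = len(prompt_words & tool_words)
        if overlap:
            scored.append((overlap, tool))
    # Stable sort: ties keep the order the server returned them in.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [tool for _, tool in scored[:limit]]


# Hypothetical tool list, shaped like the output of an MCP tool listing.
server_tools = [
    {"name": "gmail_send_email",
     "description": "Send an email from the user's Gmail account"},
    {"name": "slack_post_message",
     "description": "Post a message to a Slack channel"},
    {"name": "salesforce_search_records",
     "description": "Search Salesforce records"},
]

chosen = select_tools(server_tools, "send an email to my team", limit=1)
print([tool["name"] for tool in chosen])  # prints ['gmail_send_email']
```

Note that common words such as “to” also count as overlap here, so a stop-word list would be a sensible first refinement.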

Using Paragon’s ActionKit MCP server as an example, we used the same multi-agent pattern to plan and select tools. What the ActionKit MCP server can uniquely do is provide server-specific context, such as magic links that authenticate users directly in the chat.

Check out the ActionKit MCP server to try out different 3rd-party integration tools right in Cursor, Claude, or your very own MCP client.

Wrapping Up

In this deep dive we went over:

- Tool correctness and task completion as performance metrics
- LLM choice as the #1 variable for improving performance, with further gains from tuning prompts, descriptions, and routing
- Context-window issues as tools scale
- Patterns for dynamic tool loading

Tools present interesting challenges for AI product builders. Solving for these challenges is worth it, as tools equip agents with ways to interact with the outside world.

If you’d like to see how Paragon can help equip your AI product with integrations in your users’ world - like their Google Drive, Slack, Salesforce, and more - check out our use case page and book some time with our team.

Hungry for more AI deep dives and tutorials? Visit our learn page for more content like this!

Sources

1. DeepEval LLM Evaluation Metrics - https://www.confident-ai.com/blog/llm-evaluation-metrics-everything-you-need-for-llm-evaluation
2. Paragon’s Guide to Optimizing Tool Calling - https://www.useparagon.com/learn/rag-best-practices-optimizing-tool-calling/
3. Vercel AI SDK’s Agent Guide - https://ai-sdk.dev/docs/foundations/agents#orchestrator-worker

Jack Mu, Developer Advocate