MVP to Production AI

Permissions & Access Control for Production RAG Apps

A deep dive into permissions for RAG - challenges, strategies, and an end-to-end implementation

Why Are Permissions Important for RAG?

Perhaps the biggest separator between a “cool MVP chatbot with RAG” and a production-grade RAG application is permissions. When building for customers and enterprises, it’s not enough to be performant. Permissions are table stakes.

Taking your application from cool MVP to production-grade means that your RAG application needs to support multi-tenancy and control access to sensitive data. In this article, we’ll:

Go over the problem space: why RAG permissions can get complicated when layering in external context

Walk through a few different permissions protocols and the tradeoffs for each

Implement a permissions system that’s production-ready using our recommended permissions protocol

Author’s Note: We provided a lengthy explanation of the problem space and different permissions protocols. If you’d like to skim and go straight to the tutorial implementation, our recommendation is to use a permissions graph. Our implementation will be using this protocol with batch checking.

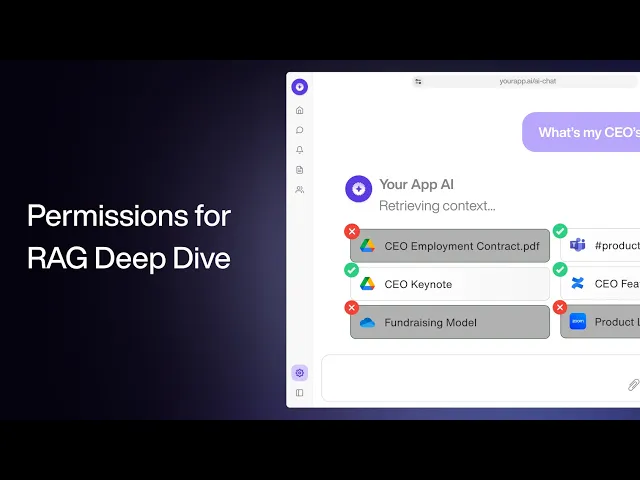

Video Explainer

Watch our video deep dive or read our full write-up below!

Challenges of external context

Permissions are not a new problem for product builders. However, as AI applications race to become more integrated with their users’ workflows, permissions have become more complex.

A quick example: Cursor - the AI code editor - has built integrations with Slack and Github, bringing Cursor features to the platforms their users are in. We can imagine a world where Cursor builds even more integrations: Jira, Confluence, Linear, and other platforms where engineers work. These integrations would allow Cursor to understand their users’ work better (RAG) and perform work in those platforms (tool calling).

Integrations sound great for AI apps! Hold on though. If your RAG application has ingested your users’ context from all of these 3rd-party integrations, how do you manage permissions to that 3rd-party data?

Should we avoid storing data and consult the 3rd-party provider’s API on every retrieval?

Or should we store the 3rd-party permissions for every ingested data artifact?

And if you’re building for teams and enterprises, usually company admins are authorizing their 3rd-party data to SaaS applications.

How do you handle permissions for individual end-users when data access is authorized at an organization level?

These are the challenges of RAG applications with external context. So now onto the solutions - let’s go into the different permissions protocols that solve for these problems.

Different Permissions Protocols

There’s no one-size-fits-all for every RAG application. That’s why we’ll be introducing 4 main protocols and explaining when each method shines.

RAG queries with tool calling

Data ingestion with separate namespaces

Data ingestion with ACL (access control list) table

Data ingestion with ReBAC (relationship-based access control) permissions graph

RAG Queries with Tool Calling

If you’re not familiar with agent tool calling, tools provide an AI application with code that it can run. You provide:

a description of when the tool should be used

the inputs for what parameters the code needs

the actual code that gets run

The code that runs in a tool call can be anything. For RAG use cases, the tool call can involve using an API call to an integration-provider.

Using Notion as an example, your RAG application can call the Notion GET contents API to retrieve context from Notion at prompt-time using your users’ Notion OAuth credentials. Because your RAG application will always be going straight to the Notion API using your users’ Notion credentials, your RAG application will never be able to query data that your users don’t have access to.
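The three parts of a tool (description, inputs, code) could be sketched in Python as follows. This is a minimal sketch under stated assumptions, not a definitive implementation: the `search_notion` name, the layout of the tool dictionary, and the injected `http_post` helper are hypothetical, and the request targets Notion's search endpoint with an assumed API version header rather than any specific "get contents" call.

```python
from typing import Any, Callable, Dict

# Hypothetical HTTP helper signature: (url, headers, json_body) -> parsed JSON.
HttpPost = Callable[[str, Dict[str, str], Dict[str, Any]], Dict[str, Any]]


def search_notion(query: str, user_token: str, http_post: HttpPost) -> Dict[str, Any]:
    """Search Notion on behalf of one user, using that user's OAuth token."""
    headers = {
        "Authorization": f"Bearer {user_token}",
        "Notion-Version": "2022-06-28",  # assumed API version
        "Content-Type": "application/json",
    }
    return http_post("https://api.notion.com/v1/search", headers, {"query": query})


# The three parts of a tool: a description, an input schema, and the code to run.
NOTION_SEARCH_TOOL = {
    "name": "search_notion",
    "description": "Use when the user asks about content in their Notion workspace.",
    "parameters": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
    "run": search_notion,
}
```

Because the token is passed in per request, the provider itself decides what the user can see; the application never stores or evaluates Notion permissions.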

Tool calling is one of the safest ways to query data from integration providers while respecting permissions. There’s no storage of external data and your application will always use your users’ credentials to query from the 3rd-party data source on their behalf. However, the tool calling approach comes with tradeoffs, occurring when:

The integration-provider’s API is not optimal for querying/searching

Not every 3rd-party API is optimized for search and querying. For example, Salesforce provides a SQL-like API endpoint, which is very effective for querying for RAG.

On the other hand, Google Drive's API is limited to keyword-style search over files and offers no semantic search endpoint, so you can't run a semantic search query across a user's Google Drive directly.

Tool calling performance is not up-to-par

Tool calling can be unreliable as it relies on the LLM’s ability to choose the right tool and input the right parameters. This can actually be an extremely brittle process.

Going back to the Salesforce SQL API example, the parameter can easily be malformed or of the wrong data type. Even if the LLM retries the tool multiple times to get a successful tool call, this adds latency to a RAG response.

In contrast, RAG with data ingestion to a vector database with query-time retrieval is generally more reliable. Vector search is more resilient to typos and malformed inputs as your users’ queries are transformed into vectors and undergo similarity searching.

Check out this research article on tool calling versus vector search for RAG for a deeper dive.

Multiple integration-provider APIs must be called

If your RAG application involves just one or two integrations, tool calling can be viable for your RAG application (assuming that the integration-provider’s API is suitable and tool calling performance is optimized). However, this protocol isn’t scalable if your RAG application needs to aggregate and synthesize data from multiple sources.

Imagine if your RAG application had 4 integrations - that would result in your agent having to call 4 APIs per user prompt.

As tool calling has its share of tradeoffs for RAG applications with multiple integrations, the next 3 approaches center around data ingestion into a vector database for vector-search-based RAG retrieval. While setting up the infrastructure for data ingestion is indeed more involved, it provides key benefits in performance and flexibility for RAG applications.

Data Ingestion with Separate Namespaces

The second protocol for permissions involves data ingestion to a vector database. Many vector databases like Pinecone and AstraDB have namespaces - partitions that keep data separate between multiple tenants using the same database.

In practice, this can look like a separate namespace per user or per organization.

When ingesting your users’ 3rd-party data, you can store all the vector embeddings in that user’s namespace. When that user prompts your RAG application, your app can restrict RAG retrieval to their namespace, ensuring proper access control to data.
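To make the idea concrete, here is a minimal sketch of namespace-scoped retrieval using a toy in-memory store. The `NamespacedStore` class and its methods are hypothetical stand-ins for a real vector database client; they only illustrate that a query never scans another tenant's partition.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class NamespacedStore:
    """Toy in-memory vector store with one partition per tenant."""

    def __init__(self) -> None:
        self._namespaces: Dict[str, List[Tuple[str, Vector]]] = defaultdict(list)

    def upsert(self, namespace: str, chunk_id: str, vector: Vector) -> None:
        self._namespaces[namespace].append((chunk_id, vector))

    def query(self, namespace: str, vector: Vector, top_k: int = 3) -> List[str]:
        # Only the caller's namespace is searched; other tenants' data is never scanned.
        candidates = self._namespaces.get(namespace, [])
        ranked = sorted(candidates, key=lambda c: cosine(vector, c[1]), reverse=True)
        return [chunk_id for chunk_id, _ in ranked[:top_k]]
```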

Where this is really powerful is for use cases where each of your users has their own unique data that isn’t shared with other users of your application. For example, in your RAG application, you can allow users to upload PDF documents and then allow your AI application to retrieve context from those documents. Separate namespaces work great here as local PDF documents from your users' computers don’t have any inherent permissions - the user who uploaded the file is the only one who needs permissions to it.

However, what if your RAG application has access to a PDF shared by multiple users across your customer's Google Drive workspace? Google Drive files do have inherent permissions, with lists of users and teams with read access. If your customers are teams and enterprises vs. individual 'consumer' users, a company admin (not individual employees) will likely set up the integrations on behalf of their company, and allow your RAG application to ingest their company’s Google Drive, Sharepoint, Box, etc. In these scenarios, the separate namespaces approach becomes more complicated, with a few major downsides.

Massive amounts of data replication

When using separate namespaces for permissions, each end-user needs their own namespace. If an enterprise customer has 100 employees, that means 100 separate namespaces.

As mentioned, this looks OK if each end-user has access to files that are unique to them. But think of all the shared files, like Company Vacation Policy.pdf that every employee has access to. With the separate namespace strategy, you would need this file to be replicated in each employee’s namespace.

For larger organizations, this is neither scalable nor cost-friendly in terms of database storage.

Massive amounts of data operations

With massive amounts of data replication, data operations can get out of hand. Permissions data needs to be created, updated, and deleted, as external data isn’t static - Sharepoint files are created, updated, shared with more people. In the namespace implementation where your customer has 100 employees, a single update to one Google Drive file would require updating that data across 100 namespaces.
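The cost of this replication is simple arithmetic, sketched below. The workspace numbers and file names are hypothetical; the point is only that stored copies and per-update writes both grow with the number of readers of each file.

```python
from typing import Dict


def namespace_copies(readers_per_file: Dict[str, int]) -> int:
    """Total stored copies when each reader's namespace holds its own copy."""
    return sum(readers_per_file.values())


def writes_for_update(file_name: str, readers_per_file: Dict[str, int]) -> int:
    """Namespaces that must be rewritten when one shared file changes."""
    return readers_per_file[file_name]


# Hypothetical workspace: 100 employees, one company-wide file, one private file.
readers = {"Company Vacation Policy.pdf": 100, "alice-notes.pdf": 1}
```

With a single shared namespace, the same two files would be stored once each, and an update to the vacation policy would be one write instead of 100.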

Data Ingestion with ACL Table

Unlike the last two protocols, data ingestion with an ACL (Access Control List) database involves a separate database from the vector database for storing native data source permissions. Generally, ACLs use a relational database and can be as simple as a single table or involve more complex modeling with multiple entity and relationship tables.

Whether you opt for a simpler data model or a more complex one for your ACL, here are the universal steps for implementing permissions ACLs:

Data ingestion as usual

Unlike the previous namespaces approach, you don’t need a separate namespace per end-user. Even with enterprise customers, when an admin enables data ingestion from their organization's file storage or CRM systems, your RAG application can put the chunked vector embeddings in a single database/namespace, simplifying the data ingestion process.

Permissions ingestion

Where this permissions protocol differs from querying with tool calls and separate namespaces is that you would need a separate database for indexing and storing permissions. Just as integration providers provide APIs for pulling data, they’ll generally also provide an API to pull permissions.

With this protocol, whenever your RAG application retrieves context from your vector database, your application will also check the ACL tables with the native integration provider permissions to check if the authenticated user has access to the retrieved data source in question. This ensures the correct permissions are always enforced with each RAG query.
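A query-time check against a single-table ACL might look like the sketch below. It uses SQLite for illustration only; the `acl` table layout, the `object_id` key on each chunk, and the `filter_permitted` helper are assumptions, not a prescribed schema.

```python
import sqlite3
from typing import Dict, List

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE acl (object_id TEXT, user_id TEXT, PRIMARY KEY (object_id, user_id))"
)
conn.executemany(
    "INSERT INTO acl VALUES (?, ?)",
    [("file:ad-creative", "user:jack"), ("file:payroll", "user:jill")],
)


def filter_permitted(user_id: str, chunks: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep only retrieved chunks whose source object the user may read."""
    object_ids = sorted({c["object_id"] for c in chunks})
    if not object_ids:
        return []
    placeholders = ",".join("?" for _ in object_ids)
    rows = conn.execute(
        f"SELECT object_id FROM acl WHERE user_id = ? AND object_id IN ({placeholders})",
        [user_id, *object_ids],
    ).fetchall()
    allowed = {row[0] for row in rows}
    return [c for c in chunks if c["object_id"] in allowed]
```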

The main tradeoff of storing permissions in an ACL is that you are now responsible for making sure your permissions data is always up-to-date. Here are a few considerations for updating permissions data:

Permissions Change Infrastructure

To keep your permissions ACL up-to-date, you will need to build services to either poll for updates or listen for webhooks. Both are viable options, and the choice will depend on your business requirements for data freshness and on the integration provider's API. Google Drive's API supports webhooks for file changes; Dropbox provides a /list_folder/get_latest_cursor endpoint whose cursor can be used to long poll for changes.
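A polling service usually boils down to "fetch changes since the last cursor, apply them, remember the new cursor." The sketch below shows one such step. The `FetchChanges` signature and the shape of each change record are hypothetical; real providers return their own formats.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

# Hypothetical change feed: given a cursor, return (changes, next_cursor).
# Each change is {"object_id": ..., "user_id": ..., "action": "grant" | "revoke"}.
FetchChanges = Callable[[Optional[str]], Tuple[List[Dict[str, str]], str]]


def poll_once(acl: Set[Tuple[str, str]], cursor: Optional[str], fetch: FetchChanges) -> str:
    """Apply one page of permission changes to the ACL and return the new cursor."""
    changes, next_cursor = fetch(cursor)
    for change in changes:
        entry = (change["object_id"], change["user_id"])
        if change["action"] == "grant":
            acl.add(entry)
        else:
            acl.discard(entry)
    return next_cursor
```

A webhook handler could reuse the same apply logic; only the trigger differs.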

ACL Modeling

ACLs are generally stored in relational databases and therefore have a few different implementations with different read/write advantages. The simplest implementation is a table where each file/object has a list of allowed users. This means permissions are "flattened": a chain like "user:jack -> team:marketing -> folder:marketing-assets -> file:ad-creative" is stored simply as "file:ad-creative is accessible by user:jack."

This flattened model is extremely read-efficient when your RAG application needs to check permissions at query-time as it requires no table joins to check for access to parent folders/objects.

But this model is extremely write-inefficient. Take for example a Sharepoint permissions change to a folder where a user has their access revoked. Your RAG application would need to perform a graph traversal for every file and child folder, updating multiple rows in your ACL table.

A different ACL model uses separate tables to track relationships rather than "flattening" hierarchical structures. For example, you could have a users table, teams table, folders table, files table, and separate tables tracking relationships - users_teams table, users_folders table, users_files table, etc. With this ACL model, writes are efficient. In our example with the Sharepoint folder permissions change, rather than updating multiple rows, we only need to update a single row in the users_folders table.

Where this relationship-based model falls short is on reads. The more relationships there are, the more joins are needed whenever your RAG application queries the permissions ACL table.
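The read/write tradeoff of the relationship-based model can be seen in a small SQLite sketch. The table names here (`users_teams`, `teams_folders`, `folders_files`) and the helper functions are illustrative assumptions; a real schema would also need entity tables and nested folders.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript(
    """
    CREATE TABLE users_teams   (user_id TEXT, team_id TEXT);
    CREATE TABLE teams_folders (team_id TEXT, folder_id TEXT);
    CREATE TABLE folders_files (folder_id TEXT, file_id TEXT);
    INSERT INTO users_teams   VALUES ('user:jack', 'team:marketing');
    INSERT INTO teams_folders VALUES ('team:marketing', 'folder:marketing-assets');
    INSERT INTO folders_files VALUES ('folder:marketing-assets', 'file:ad-creative');
    """
)


def can_read(user_id: str, file_id: str) -> bool:
    """Read path: the query joins three tables to connect a user to a file."""
    row = db.execute(
        """
        SELECT 1
        FROM users_teams ut
        JOIN teams_folders tf ON tf.team_id = ut.team_id
        JOIN folders_files ff ON ff.folder_id = tf.folder_id
        WHERE ut.user_id = ? AND ff.file_id = ?
        LIMIT 1
        """,
        (user_id, file_id),
    ).fetchone()
    return row is not None


def revoke_team_folder(team_id: str, folder_id: str) -> None:
    """Write path: revoking folder access deletes a single relationship row."""
    db.execute(
        "DELETE FROM teams_folders WHERE team_id = ? AND folder_id = ?",
        (team_id, folder_id),
    )
```

Every additional level of hierarchy (nested folders, nested groups) adds another join or a recursive query to the read path.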

With these heavy tradeoffs no matter the data model, this brings us to our last permissions protocol where we consider forgoing a traditional relational database for a graph database.

Data Ingestion with ReBAC Permissions Graph

The last permissions protocol involves using a ReBAC Permissions Graph. Similar to data ingestion with an ACL table, we are storing permissions in a database, but opting for a ReBAC permissions graph over a relational database. If you’re not familiar with ReBAC graphs, let’s break it down.

ReBAC stands for “Relationship Based Access Control.” Unlike using roles, attributes, or ACLs to propagate permissions, ReBAC defines relationships between users, teams, collections (folders), and objects (files). ReBAC has been proven to work across different data sources, as seen in Zanzibar, Google’s global authorization system that they use across their products.

Similar to the ACL protocol described above, data ingestion, permissions indexing, and permissions change infrastructure are requirements for this protocol. Where graph databases differ from the ACL protocol is that graph databases are optimized for relationship reads and writes.

Whereas a relational database requires multiple joins to track relationships between users, teams, folders, and files, a graph database’s native queries are graph algorithms that can efficiently identify relationships like user:jack has relationship:can_read to file:ad-assets. Not only are reads efficient, there is no tradeoff with writes. Changes to relationships, like revoking access to a folder or a user leaving a team, are as easy as deleting an “edge” (relationship) between two “nodes” in the graph.

For example, if a user loses membership to a team with permissions to certain folders and files, revoking permissions in a graph is as simple as deleting an edge between a user node and team node, rather than modifying multiple rows in an ACL table.
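A toy in-memory graph makes the edge-deletion point concrete. This is a minimal sketch, not a real graph database: the `PermissionGraph` class is hypothetical, and the breadth-first search stands in for the native traversal a graph engine would provide.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Set


class PermissionGraph:
    """Toy directed graph: an edge a -> b means 'a has access through b'."""

    def __init__(self) -> None:
        self.edges: DefaultDict[str, Set[str]] = defaultdict(set)

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src].add(dst)

    def remove_edge(self, src: str, dst: str) -> None:
        self.edges[src].discard(dst)

    def can_reach(self, user: str, obj: str) -> bool:
        # Breadth-first search from the user node toward the object node.
        seen, queue = {user}, deque([user])
        while queue:
            node = queue.popleft()
            if node == obj:
                return True
            for nxt in self.edges.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return False


graph = PermissionGraph()
graph.add_edge("user:jack", "team:marketing")
graph.add_edge("team:marketing", "folder:marketing-assets")
graph.add_edge("folder:marketing-assets", "file:ad-creative")
```

Calling `graph.remove_edge("user:jack", "team:marketing")` revokes Jack's access to everything the team can reach, with one write and no per-file updates.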

In addition to optimized reads and writes, graph databases also benefit from their flexibility. In the graph schema, your application developers can define as many types of entities and relationships as needed. This flexibility is useful when storing different integration provider permissions in a single database. For your RAG application, you can index Google Drive, Sharepoint, Hubspot, Salesforce, and Gong all in the same graph. All providers can share node types like users and teams; Google Drive and Sharepoint can share node and relationship types like files and folders; Hubspot and Salesforce can use their own node types like Contacts and Deals.

Across both performance and flexibility, graph databases are the best choice for indexing and maintaining permissions. However, because graph databases have traditionally not seen wide adoption, there may be a learning curve for your team when it comes to modeling your graph for permissions. Here are a few nuances and extensions to think about when modeling a permissions graph:

Different permission types should use different edge types (e.g. can_read, can_write, owner)

RBAC and ReBAC can be used together where roles are an entity in the graph

Cascading relations allow permissions to be propagated (e.g. propagating permissions of a folder to its child files/folders)

Logical operators like union, intersection, and exclusion can be used to model interactions like blocklists

Wildcards can be used on user types to model public access
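Several of these modeling ideas can be sketched with plain relationship tuples. The example below is illustrative only: the tuple layout `(object, relation, subject)`, the relation names, and the `user:*` wildcard convention are assumptions, and only union and exclusion are shown (not intersection).

```python
from typing import Set, Tuple

# Relationship tuples: (object, relation, subject). All names are illustrative.
TUPLES: Set[Tuple[str, str, str]] = {
    ("folder:assets", "can_read", "team:marketing"),
    ("team:marketing", "member", "user:jack"),
    ("team:marketing", "member", "user:jill"),
    ("file:ad-creative", "parent", "folder:assets"),
    ("file:ad-creative", "blocked", "user:jill"),
    ("file:press-kit", "can_read", "user:*"),  # wildcard: public access
}


def has_direct(obj: str, relation: str, user: str) -> bool:
    """True if the user, a wildcard, or one of the user's teams holds the relation."""
    for o, r, subject in TUPLES:
        if o != obj or r != relation:
            continue
        if subject in (user, "user:*"):
            return True
        if subject.startswith("team:") and (subject, "member", user) in TUPLES:
            return True
    return False


def can_read(obj: str, user: str) -> bool:
    # Exclusion: an explicit block overrides any grant.
    if (obj, "blocked", user) in TUPLES:
        return False
    # Union: a direct grant OR a grant inherited from a parent (cascading).
    if has_direct(obj, "can_read", user):
        return True
    parents = [s for o, r, s in TUPLES if o == obj and r == "parent"]
    return any(can_read(parent, user) for parent in parents)
```

Here Jack reads the file through his team's folder grant, while Jill, a member of the same team, is excluded by the blocklist edge on that one file.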

Implementing Access Control Through the Graph Approach

Let’s revisit the enterprise RAG app we built in our previous chapter, YourApp.ai. We built an AI application with connectors to ingest file storage and CRM data and enforce permissions on RAG retrieval.

What we didn’t go into in our last tutorial was that we actually implemented permissions with a permissions graph protocol!

In the previous sections of this chapter, we covered multiple approaches because we wanted to provide context on all the optionality that your team has for developing a permissions system for RAG. That being said, the protocol we recommend for most use cases and the one we implemented for our tutorial in the last chapter is data ingestion with a ReBAC permissions graph.

To compare side-by-side, the data ingestion with permissions graph protocol

provides better RAG performance than the 3rd-party API tool calling protocol

is more memory and cost efficient than the separate namespace protocol

is more performant and flexible than the ACL protocol

Let’s explore the different ways to implement access control with a permissions graph.

Pre vs Post Retrieval: When to enforce access control

A permissions graph is purely a database. It doesn’t actually enforce permissions inherently. What the graph provides is the ability to read/query permissions efficiently.

Graph databases allow you to efficiently perform 3 types of read operations:

Finding what objects a user has access to

Finding what users have access to an object

Checking if a user and object have a relationship
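The three read operations can be sketched as three small functions over a set of relationships. This is a simplified illustration: the `RELATIONS` set is already flattened, whereas a real graph would resolve these answers by traversal, and the function names are hypothetical.

```python
from typing import List, Set, Tuple

# Flattened (user, object) read relationships; a real graph would resolve these
# by traversal, but the three query shapes are the same.
RELATIONS: Set[Tuple[str, str]] = {
    ("user:jack", "file:ad-creative"),
    ("user:jack", "file:brand-guide"),
    ("user:jill", "file:ad-creative"),
}


def list_objects(user: str) -> List[str]:
    """Read operation 1: which objects can this user access?"""
    return sorted(obj for u, obj in RELATIONS if u == user)


def list_users(obj: str) -> List[str]:
    """Read operation 2: which users can access this object?"""
    return sorted(u for u, o in RELATIONS if o == obj)


def check(user: str, obj: str) -> bool:
    """Read operation 3: does this user have a relationship to this object?"""
    return (user, obj) in RELATIONS
```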

Pre-retrieval Access Control

Read operation 1 enables pre-retrieval access control. Getting the allowed objects for a user means you first query the permissions graph for object IDs (these could be file, page, or record IDs depending on the integration data source), then use that list of object IDs to construct a metadata filter when querying your RAG database.

This is called pre-retrieval access control as you are first filtering permitted objects before vector retrieval from the vector database.

Post-retrieval Access Control

Post-retrieval access control enforces permissions after vector embeddings are retrieved from your vector database. In your application backend, you would then use read operation 2 or 3 to check if the object IDs associated with vectors retrieved from the vector database are allowed.

Implementation with Permissions API

To take advantage of the performance and flexibility benefits of a permissions graph and start implementing pre or post-retrieval access control, we usually would have to go through the delicate exercise of properly defining our schema and relationships.

However, Paragon offers a fully-managed permissions graph as part of Managed Sync, a service that handles the infrastructure around data ingestion pipelines and permissions for 3rd-party integrations.

In our last tutorial, we used Managed Sync’s Sync API to ingest Google Drive and Salesforce data. When data is synced with the Sync API, a managed permissions graph is automatically spun up and maintained on Paragon’s infrastructure. We could then use the Permissions API to query the managed permissions graph with our synced data directly.

This means that we don’t need to worry about carefully defining schemas and relationships for different integration providers OR building permission change infrastructure. We can just use the different Permissions API endpoints to implement either pre or post-retrieval access control.

Check out the docs for more detail on each of these API methods.

Permissions API - Batch Checking

The endpoint we implemented for our tutorial app, YourApp.ai, is the batch-check endpoint. This endpoint allows us to pass in an array of user-object relationships to check permissions of multiple 3rd-party objects with one API call.

From there, we filtered out the chunks based on whether or not the object passed the check!
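The filtering step might be sketched as follows. The `BatchCheck` callable is a hypothetical wrapper around the batch-check endpoint, and its request and response shapes here are assumptions for illustration, not the actual API contract; consult the docs for the real format.

```python
from typing import Callable, Dict, List

# Hypothetical batch-check client: takes [{"user": ..., "object": ...}, ...] and
# returns one boolean per check, in the same order. Not the real API shape.
BatchCheck = Callable[[List[Dict[str, str]]], List[bool]]


def filter_chunks(
    user_id: str, chunks: List[Dict[str, str]], batch_check: BatchCheck
) -> List[Dict[str, str]]:
    """Post-retrieval filter: drop chunks whose source object fails the check."""
    # Deduplicate object IDs so each object is checked once per query.
    object_ids = list(dict.fromkeys(c["object_id"] for c in chunks))
    if not object_ids:
        return []
    results = batch_check([{"user": user_id, "object": oid} for oid in object_ids])
    allowed = {oid for oid, ok in zip(object_ids, results) if ok}
    return [c for c in chunks if c["object_id"] in allowed]
```

Several chunks often come from the same file, so deduplicating object IDs keeps the batch small even when many chunks are retrieved.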

We recommend the batch-check endpoint for checking data post-retrieval - where we filter out unpermitted data after retrieving relevant chunks from the vector database.

Permissions API - List Objects

The list-objects endpoint can be used for pre-retrieval filtering.

Rather than retrieving from the vector database and then reconciling permissions, pre-retrieval filtering takes advantage of the metadata filtering function of your vector database to retrieve permitted chunks only. This puts the data filtering operations in your database layer - optimized for filtering and data operations - rather than in your backend services.

An additional advantage of pre-retrieval filtering is that you can cache the list-objects result to reduce the number of Permissions API calls. The permitted objects returned from list-objects can be used as a vector database filter for the duration of a user session or a specified TTL (time-to-live).
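A TTL cache for permitted objects could be sketched like this. The `PermittedObjectsCache` class is hypothetical, and `fetch` stands in for whatever wrapper your backend uses around the list-objects call; the injectable `clock` exists only to make the expiry behavior easy to test.

```python
import time
from typing import Callable, Dict, List, Tuple


class PermittedObjectsCache:
    """Caches each user's permitted object IDs for ttl_seconds."""

    def __init__(
        self,
        fetch: Callable[[str], List[str]],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch  # hypothetical wrapper around a list-objects call
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, List[str]]] = {}

    def get(self, user_id: str) -> List[str]:
        now = self._clock()
        entry = self._entries.get(user_id)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # still fresh: no API call
        object_ids = self._fetch(user_id)
        self._entries[user_id] = (now, object_ids)
        return object_ids
```

The TTL is also the longest a revoked permission can remain usable from the cache, so it should be chosen with your data freshness requirements in mind.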

Pinecone supports metadata filtering with operators for arrays. In this example, after retrieving a list of objects using the list-objects endpoint, we can pass in the array when retrieving records from Pinecone.
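A minimal sketch of that filter is shown below. It assumes each vector was ingested with an `object_id` metadata field (a hypothetical name) and that `index` is a Pinecone index object; the helper function names are illustrative.

```python
from typing import Any, Dict, List


def build_permission_filter(object_ids: List[str]) -> Dict[str, Any]:
    """Metadata filter matching only chunks from permitted objects."""
    # "object_id" is an assumed metadata field written at ingestion time.
    return {"object_id": {"$in": object_ids}}


def query_permitted(
    index: Any, vector: List[float], object_ids: List[str], top_k: int = 5
) -> Any:
    """Query a Pinecone-style index, restricted to the permitted object IDs."""
    if not object_ids:
        return {"matches": []}  # nothing permitted: skip the query entirely
    return index.query(
        vector=vector,
        top_k=top_k,
        filter=build_permission_filter(object_ids),
        include_metadata=True,
    )
```

Note that the filter grows with the number of permitted objects, so users with access to very many files may need the list split up or a different strategy.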

In general, we recommend using the batch-check endpoint for enforcing permissions and access control, because it only takes one API call to check access to multiple data assets and scales without need for pagination as the number of files/objects increases.

Permissions API - List Users

Another way to enforce or surface permissions is via the list-users endpoint. This endpoint can be used for post-retrieval checks, but we recommend it mainly for letting admins verify access to data assets. In our YourApp.ai site, we implemented this admin view to see synced files and check for permitted users.

Wrapping Up

In this deep-dive/tutorial we went over:

Why permissions for external context is difficult

Four different permissions protocols to properly enforce permissions of RAG retrieved data

How to implement access control using Permissions API - a managed solution for permissions

Our recommendation for most use cases: the permissions graph protocol with batch checking for post-retrieval access control

We covered everything you probably ever needed to know and more about RAG permissions in this tutorial! Stay tuned for more content in this tutorial series where we’ll be covering tool calling, workflows and more!

Jack Mu, Developer Advocate