Knowledge AI Chatbot

Building an AI Knowledge Chatbot with Multiple Data Integrations

How we built an AI chatbot that lets users connect their Google Drive, Notion, and/or Slack accounts, then ingests and retrieves relevant context from those apps to answer queries.

If you are building AI features for your SaaS product, an AI knowledge chatbot that draws on the data already in your application is often the first step. But it's just the tip of the iceberg. For deeper use cases, feeding data from third-party integrations into a Retrieval Augmented Generation (RAG) process lets your AI model use external data sources to give context-specific responses. Integrating with your customers’ data makes your chatbot more useful and provides a more tailored experience.

Tutorial Overview

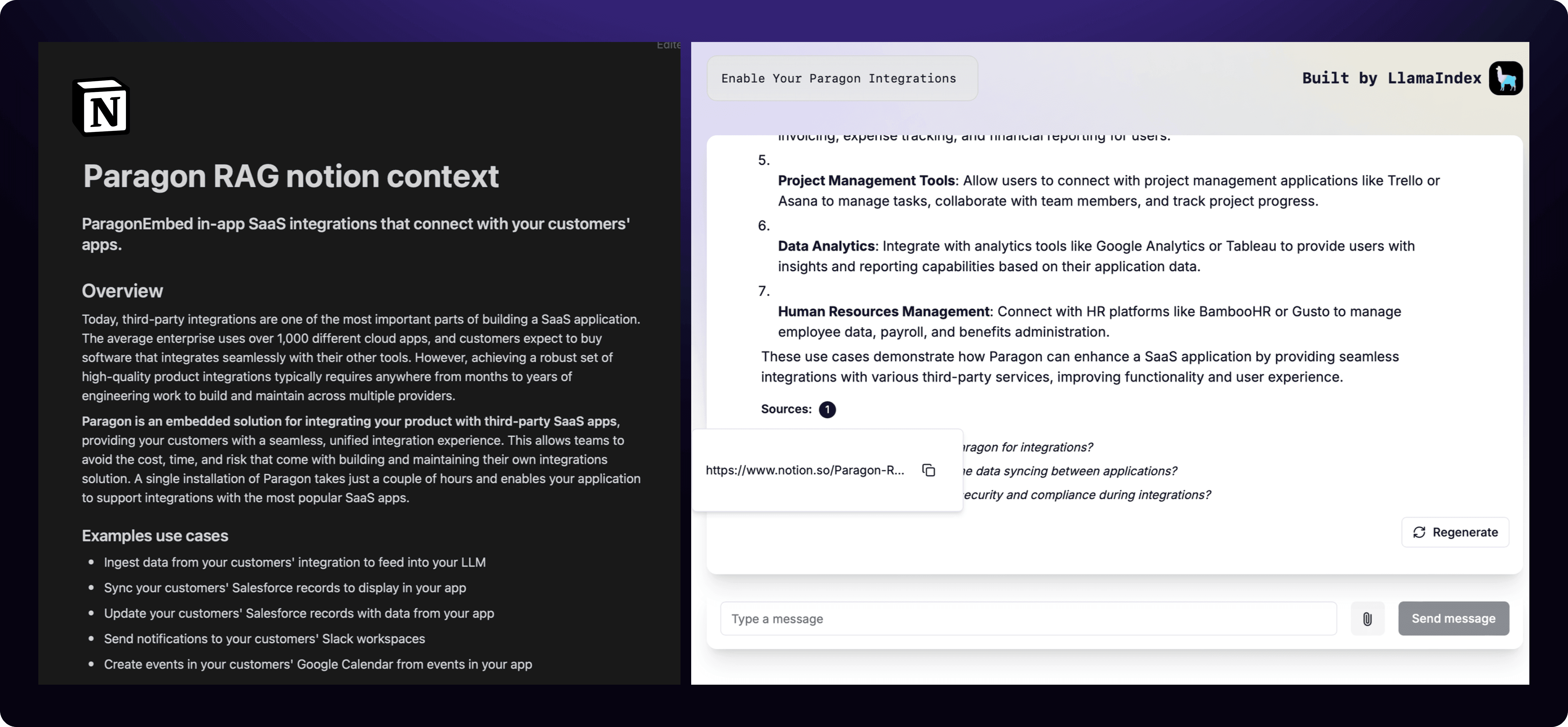

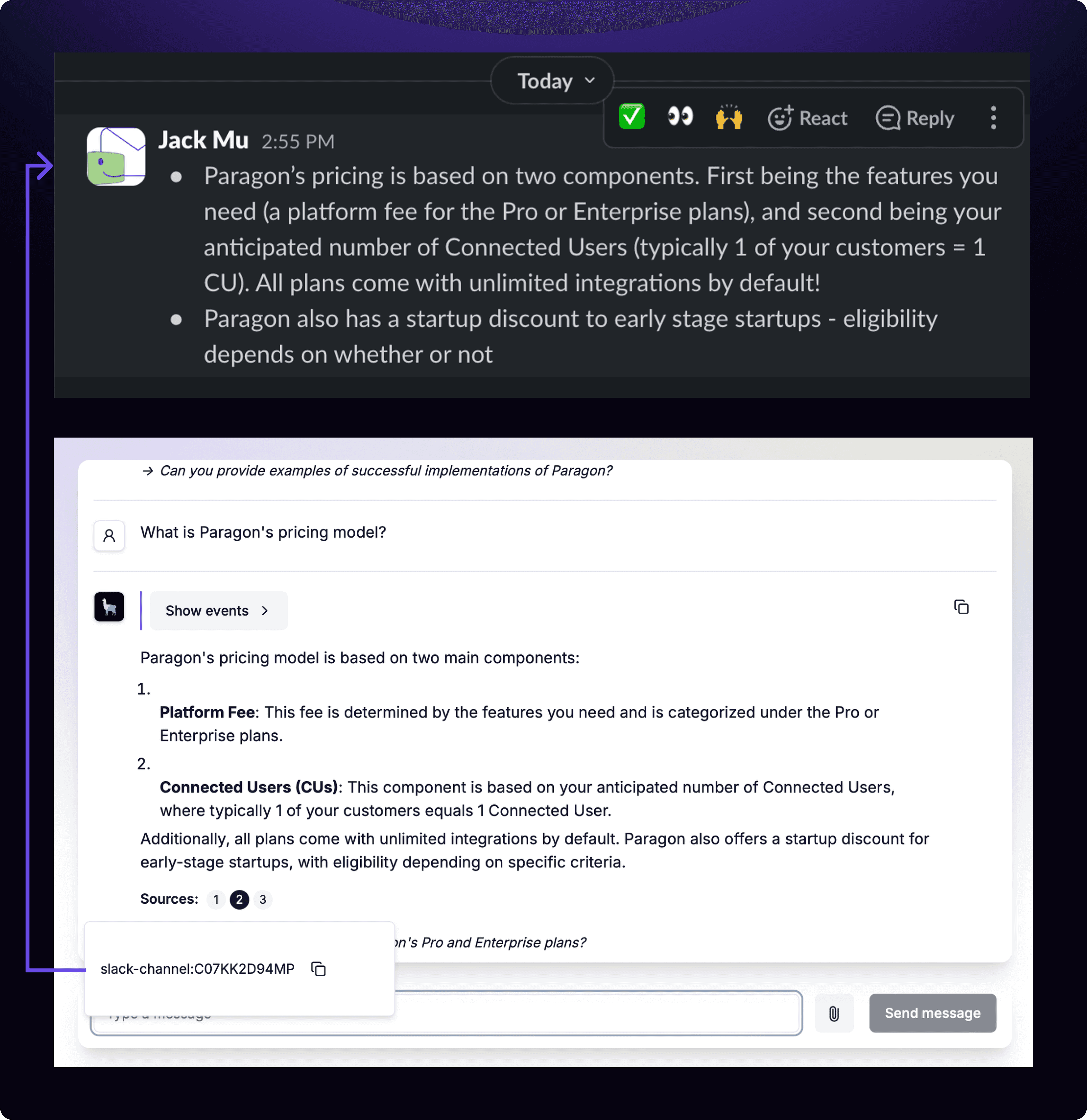

In this article (and video), we walk through how we built an AI chatbot that ingests contextual data from three platforms: Slack, Google Drive, and Notion. Our AI chatbot, which we’ll call Parato, needs to answer questions using messages from its users’ Slack, files in their Google Drive, and pages in their Notion.

Parato not only answers questions with external data from these sources; it also ingests from them in near real time (e.g., when a Notion page is updated, Parato can use the updated content within a minute), and it can cite which external document was used in a response.
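Citing sources is possible because every stored chunk carries metadata about where it came from. The sketch below is a hypothetical illustration, not Parato's code: the `RetrievedChunk` shape, its `metadata.url`/`metadata.fileName` fields, and `buildCitations` are our assumptions. It collapses the chunks returned by a vector search into one citation per source document.

```typescript
// Hypothetical shape of a chunk returned by a vector search; the field
// names are illustrative assumptions, not a real library's types.
interface RetrievedChunk {
  text: string;
  score: number; // similarity score, higher = more relevant
  metadata: { url: string; fileName?: string; source: "slack" | "gdrive" | "notion" };
}

interface Citation {
  url: string;
  label: string;
  source: RetrievedChunk["metadata"]["source"];
}

// Collapse chunks into one citation per source URL, ordered by the best
// score that any chunk from that URL achieved.
function buildCitations(chunks: RetrievedChunk[]): Citation[] {
  const best = new Map<string, { score: number; citation: Citation }>();
  for (const chunk of chunks) {
    const { url, fileName, source } = chunk.metadata;
    const existing = best.get(url);
    if (!existing || chunk.score > existing.score) {
      best.set(url, {
        score: chunk.score,
        citation: { url, label: fileName ?? url, source },
      });
    }
  }
  return Array.from(best.values())
    .sort((a, b) => b.score - a.score)
    .map((entry) => entry.citation);
}
```

Deduplicating by URL keeps the answer from listing the same Notion page or Drive file several times when several of its chunks were retrieved.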

Video Walkthrough

In this short video, we demo the AI chatbot and walk through our architecture and the implementation behind it.

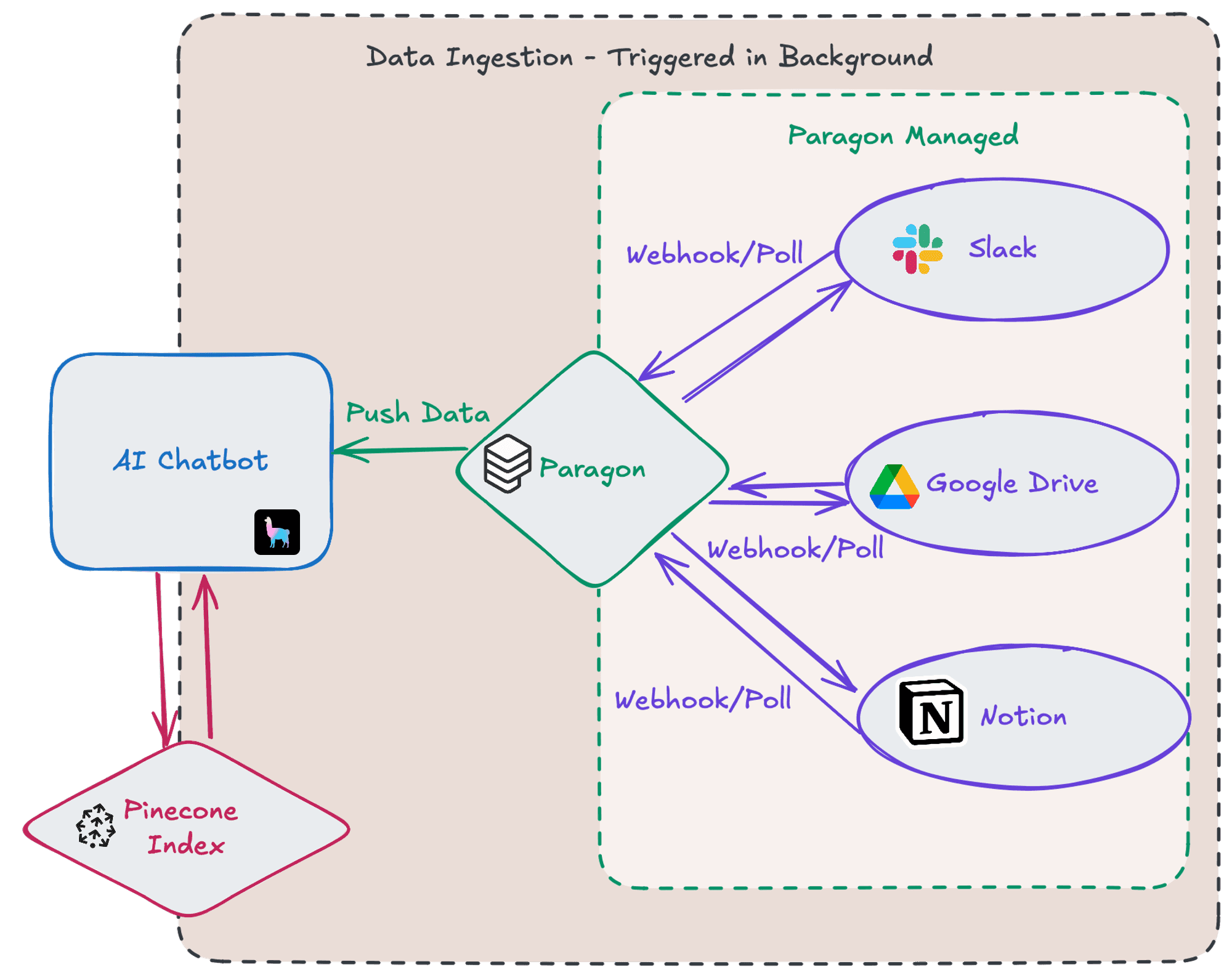

RAG Enabled Chatbot Architecture

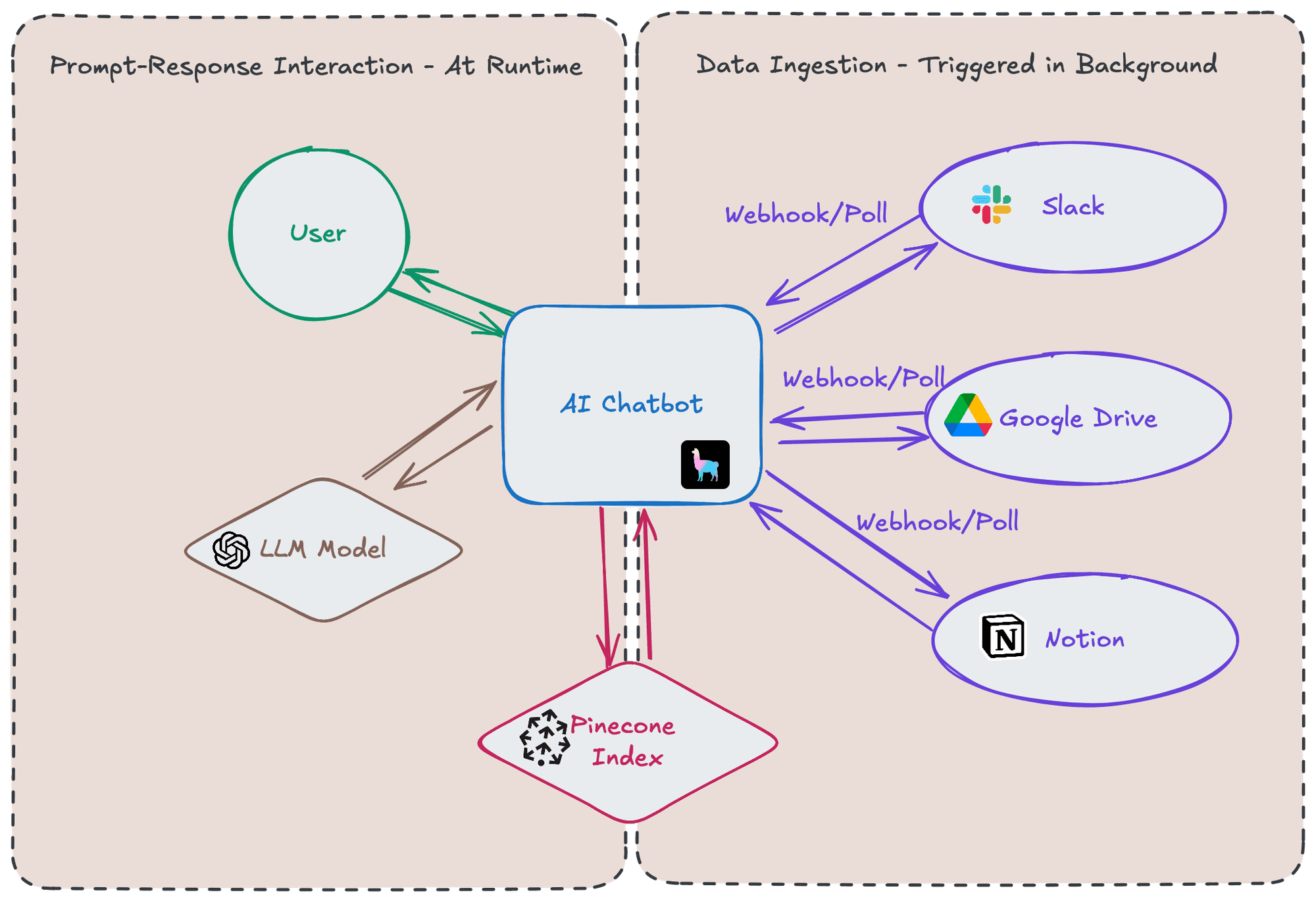

An AI chatbot generally has three components: the chatbot application itself (for our purposes, the application includes the frontend and backend), an LLM powering the chatbot, and a database (usually a vector database) for contextual data. In our case, the chatbot application also has to work with a fourth component: third-party data. Parato has to receive that data reliably, parse and chunk it, and insert it into our vector database so it can be retrieved when a user asks a question.
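At query time, the vector database's job is to return the stored chunks whose embeddings are closest to the embedding of the user's question; those chunks are then placed into the LLM prompt. Pinecone does this at scale with approximate-nearest-neighbor indexes. The brute-force sketch below only illustrates the idea using cosine similarity over an in-memory array; `StoredVector`, `topK`, and `buildPrompt` (including its prompt wording) are hypothetical names, not Pinecone's or create-llama's API.

```typescript
// Hypothetical record for one stored chunk.
interface StoredVector {
  id: string;
  values: number[]; // embedding produced by the embedding model
  text: string;     // chunk text to inject into the LLM prompt
}

function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("dimension mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Brute-force search: score every stored vector and keep the k best.
function topK(query: number[], store: StoredVector[], k: number): Array<StoredVector & { score: number }> {
  return store
    .map((v) => ({ ...v, score: cosineSimilarity(query, v.values) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k);
}

// Number the retrieved chunks so the model (and the UI) can refer to them.
function buildPrompt(question: string, context: StoredVector[]): string {
  const contextBlock = context.map((c, i) => `[${i + 1}] ${c.text}`).join("\n");
  return `Answer using only the context below.\n\nContext:\n${contextBlock}\n\nQuestion: ${question}`;
}
```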

Ingesting this data involves interacting with third-party APIs, either polling them for new data or setting up webhooks to receive data from them. This comes with its own set of challenges, including keeping up with changes to third-party APIs, authentication/authorization, infrastructure to handle the data, and monitoring. You can read more about the challenges of integrating a data-intensive application in detail here.
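Authentication applies to inbound webhooks too: the receiver has to confirm that each request really came from the provider. As one concrete example, Slack signs requests with an HMAC-SHA256 of `v0:<timestamp>:<raw body>`, keyed by the app's signing secret, and sends it in the `X-Slack-Signature` header alongside `X-Slack-Request-Timestamp`. The sketch below shows that check in Node.js, assuming you have the raw (unparsed) request body as a string; it is an illustration of the work involved, not code from Parato, and `verifySlackSignature` is a hypothetical name.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

const MAX_AGE_SECONDS = 60 * 5; // reject stale requests to limit replay attacks

// Hypothetical helper implementing Slack's v0 request-signing check.
function verifySlackSignature(
  signingSecret: string,
  rawBody: string,
  timestampHeader: string, // value of X-Slack-Request-Timestamp
  signatureHeader: string, // value of X-Slack-Signature, e.g. "v0=ab12..."
  nowSeconds: number = Math.floor(Date.now() / 1000),
): boolean {
  const timestamp = Number(timestampHeader);
  if (!Number.isFinite(timestamp) || Math.abs(nowSeconds - timestamp) > MAX_AGE_SECONDS) {
    return false;
  }
  const baseString = `v0:${timestampHeader}:${rawBody}`;
  const expected = "v0=" + createHmac("sha256", signingSecret).update(baseString).digest("hex");
  const a = Buffer.from(expected, "utf8");
  const b = Buffer.from(signatureHeader, "utf8");
  // timingSafeEqual throws on a length mismatch, so compare lengths first.
  return a.length === b.length && timingSafeEqual(a, b);
}
```

The constant-time comparison avoids leaking how many characters of a forged signature matched.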

Meet Parato

Building the Chatbot

To get started building Parato, we used LlamaIndex’s create-llama npm tool. The create-llama command-line tool generates a NextJS frontend and backend with built-in integrations for OpenAI’s models and Pinecone’s vector database. NextJS gives us a full-stack framework that’s relatively easy to deploy via Vercel, and the OpenAI and Pinecone integrations let us work with industry-standard technologies. To follow along, or for more documentation on create-llama, visit their site here.

One of create-llama's out-of-the-box features is that the generated NextJS application code includes pipelines for chunking documents, transforming them into vectors, and loading them into vector stores.

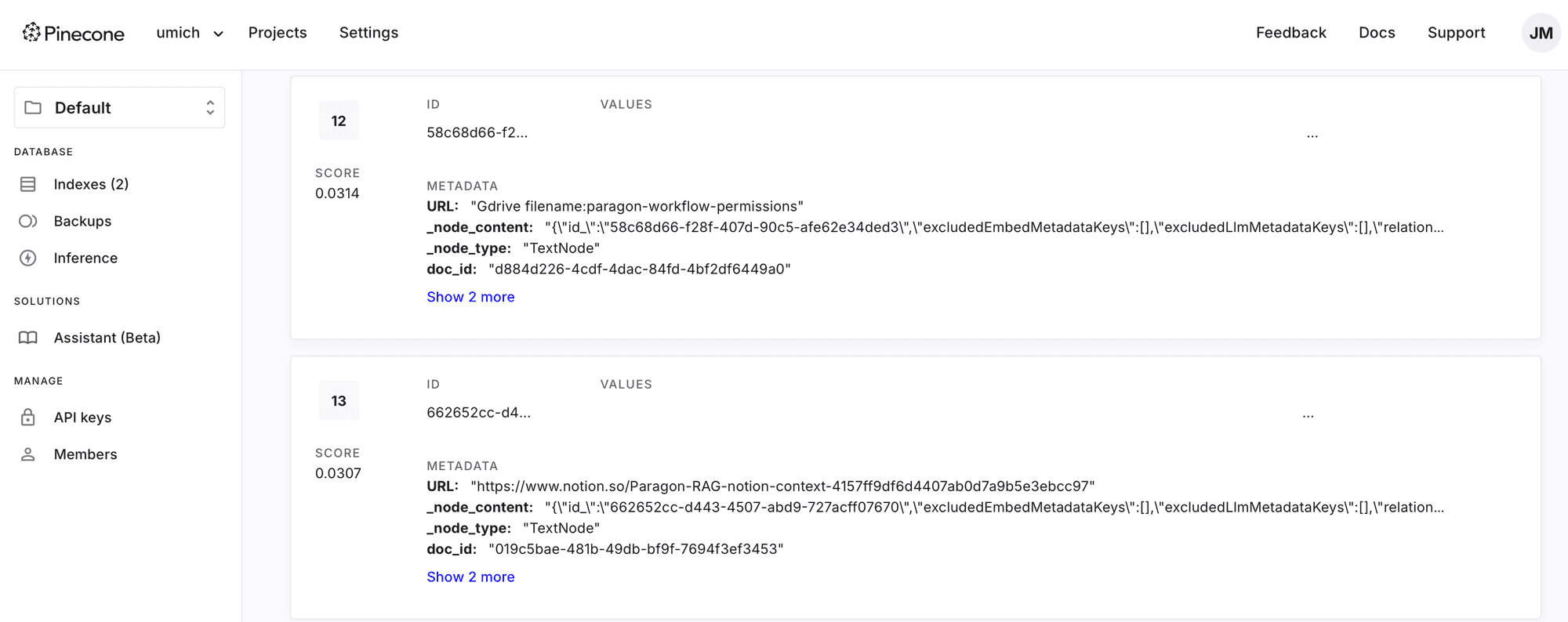
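create-llama's pipelines handle chunking for us. To illustrate what that step does, here is a simplified character-based chunker with overlap. Production splitters, including LlamaIndex's, typically split on sentence or token boundaries, so treat `chunkText` and its `chunkSize`/`overlap` defaults as illustrative assumptions rather than create-llama's implementation.

```typescript
// Hypothetical chunker: fixed-size windows that overlap, so text near a
// chunk boundary appears in both neighbouring chunks.
function chunkText(text: string, chunkSize = 1000, overlap = 200): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlap < 0 || overlap >= chunkSize) throw new Error("overlap must be in [0, chunkSize)");
  const chunks: string[] = [];
  const step = chunkSize - overlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    if (start + chunkSize >= text.length) break; // this window reached the end
  }
  return chunks;
}
```

For example, `chunkText("abcdefghij", 4, 1)` returns `["abcd", "defg", "ghij"]`: each chunk repeats the last character of the one before it.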

For custom features that aren’t available out of the box, we added a few endpoints that receive text data, attach custom metadata (such as the URL or file name of the data source), and run create-llama’s processing pipelines (chunking, converting to vectors, and loading into our Pinecone vector database). These custom endpoints are where our data ingestion engine sends data for vectorization and storage.
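An endpoint like this mainly needs to validate the incoming text and metadata before handing them to the pipeline. The sketch below shows one possible payload shape and a NextJS App Router `POST` route handler; the `IngestPayload` fields, the route path, and `runIngestionPipeline` (a placeholder standing in for create-llama's chunk/embed/load pipeline) are all hypothetical, not the endpoints Parato actually uses.

```typescript
// Hypothetical payload shape sent by the ingestion workflows.
const SOURCES = ["slack", "gdrive", "notion"] as const;
type Source = (typeof SOURCES)[number];

interface IngestPayload {
  text: string;
  metadata: { source: Source; url: string; fileName?: string };
}

function isSource(value: unknown): value is Source {
  return typeof value === "string" && (SOURCES as readonly string[]).includes(value);
}

function parseIngestPayload(body: unknown): IngestPayload {
  if (typeof body !== "object" || body === null) throw new Error("body must be a JSON object");
  const { text, metadata } = body as Record<string, unknown>;
  if (typeof text !== "string" || text.trim() === "") throw new Error("text must be a non-empty string");
  if (typeof metadata !== "object" || metadata === null) throw new Error("metadata is required");
  const { source, url, fileName } = metadata as Record<string, unknown>;
  if (!isSource(source)) throw new Error("unknown source");
  if (typeof url !== "string") throw new Error("metadata.url must be a string");
  if (fileName !== undefined && typeof fileName !== "string") throw new Error("metadata.fileName must be a string");
  return { text, metadata: { source, url, fileName: typeof fileName === "string" ? fileName : undefined } };
}

// Placeholder for create-llama's chunk/embed/load pipeline (hypothetical).
async function runIngestionPipeline(payload: IngestPayload): Promise<void> {
  console.log(`would ingest ${payload.text.length} chars from ${payload.metadata.url}`);
}

// e.g. app/api/ingest/route.ts (hypothetical path)
export async function POST(request: Request): Promise<Response> {
  let payload: IngestPayload;
  try {
    payload = parseIngestPayload(await request.json());
  } catch (err) {
    return new Response(JSON.stringify({ error: (err as Error).message }), {
      status: 400,
      headers: { "Content-Type": "application/json" },
    });
  }
  await runIngestionPipeline(payload);
  return new Response(JSON.stringify({ ok: true }), {
    headers: { "Content-Type": "application/json" },
  });
}
```

Keeping the source URL and file name in metadata is what later allows the chatbot to cite the document a chunk came from.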

After we provided our OpenAI API key and Pinecone configuration, we had a working chatbot backed by an OpenAI LLM and a Pinecone vector database. Next, we needed to build the data ingestion process that sends data to those endpoints, giving our RAG-enabled application access to new data.

Building the Data Ingestion Engine

As mentioned in the Architecture section, interacting with third-party APIs and webhooks comes with many challenges. Although these mechanisms can be built in-house, this tutorial shows how we used Paragon to orchestrate the ingestion jobs. Paragon handles many of the underlying challenges, such as authentication/authorization, load balancing, and scaling, behind the scenes.

For Parato, data ingestion from APIs and webhooks falls into two categories. First, we built a pipeline for user-triggered events, which lets users pull data from a third party like Slack when they need to. A good example is when a customer first connects their Slack account to your application: your application needs to pull all the Slack message history it has access to and ingest that data.

Second, we built a pipeline for webhook-triggered events, so that real-time events sent by third parties are immediately received, ingested, and made accessible to our chatbot. Building on the previous example, after your customer connects their Slack account, Slack sends a webhook event whenever a new message is posted, and your application needs to receive and ingest these messages at scale.

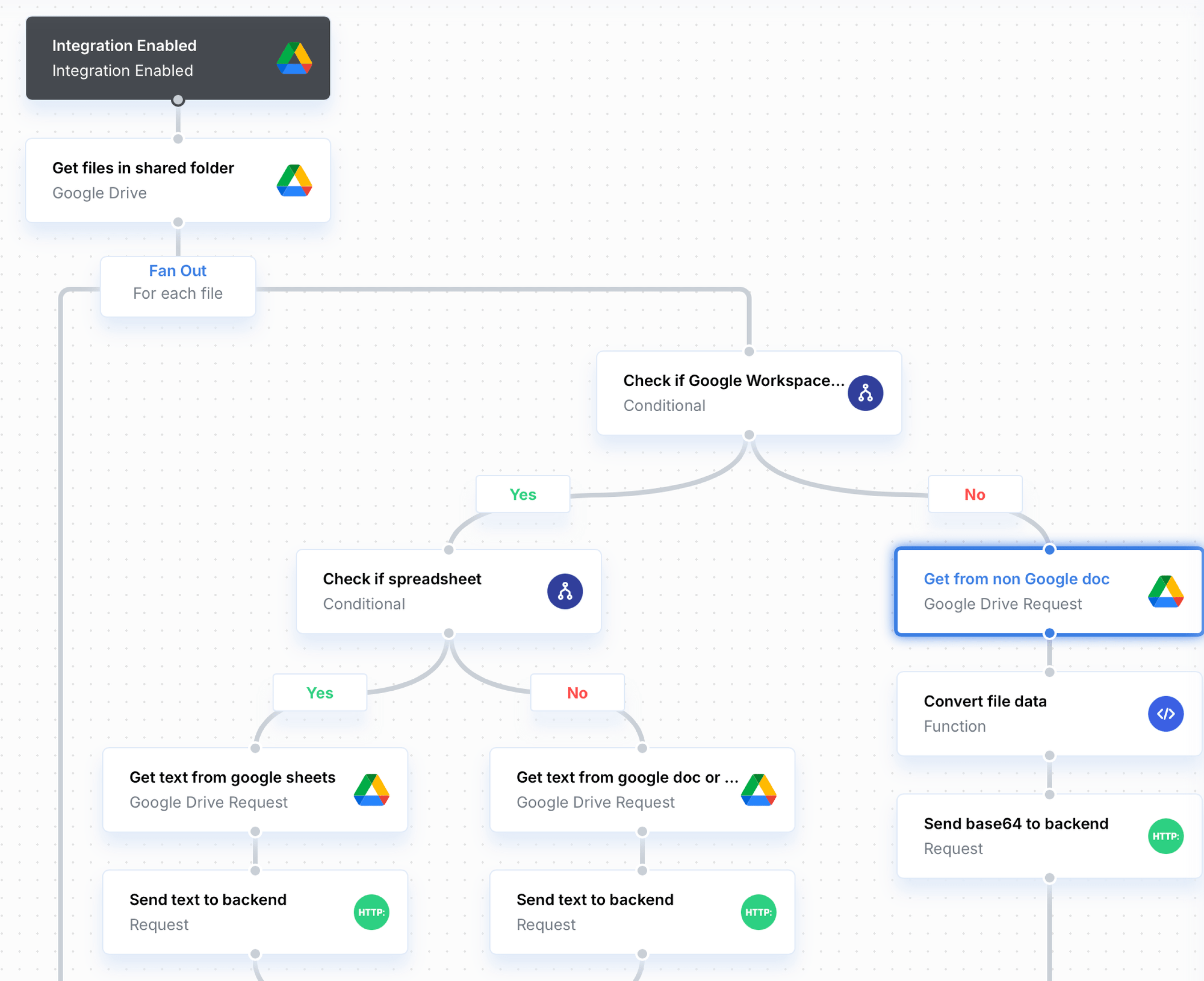
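A user-triggered backfill usually means paging through everything the integration can see. Many APIs, including Slack's Web API, use cursor-based pagination: each response carries a cursor for the next page, and an empty or missing cursor means there are no more pages. The generic sketch below assumes that pattern; `fetchPage` is a hypothetical stand-in for whatever API call (or workflow step) returns one page, and `Page`/`paginate` are illustrative names.

```typescript
// Hypothetical page shape for a cursor-paginated API.
interface Page<T> {
  items: T[];
  nextCursor?: string; // absent or empty when there are no more pages
}

// Walk every page, starting with no cursor, and yield items one at a time.
async function* paginate<T>(
  fetchPage: (cursor?: string) => Promise<Page<T>>,
): AsyncGenerator<T> {
  let cursor: string | undefined = undefined;
  do {
    const page: Page<T> = await fetchPage(cursor);
    yield* page.items;
    cursor = page.nextCursor || undefined;
  } while (cursor);
}
```

A backfill can then loop with `for await (const message of paginate(fetchPage))` and send each item to the ingestion endpoint, without ever holding the whole history in memory.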

User-triggered events

We built our data ingestion pipelines with Paragon. In Paragon’s workflow builder, we created a workflow that combines Google Drive API steps with custom logic: conditionals, “fan outs” (for loops), JavaScript functions for parsing and transformations, and HTTP requests for sending data to our backend.

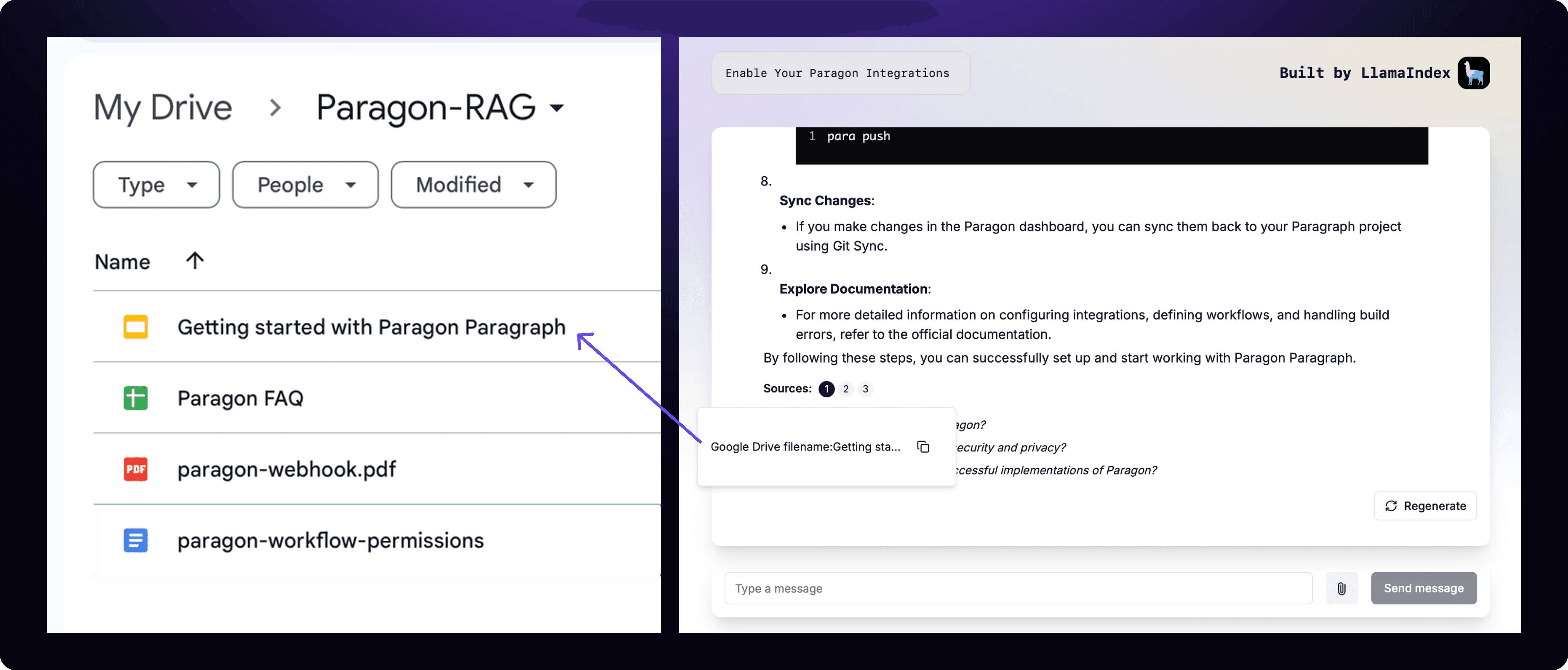

With this workflow, Parato can get all files in a Google Drive folder, apply the appropriate data transformation to each file based on its type (Google Doc vs. CSV vs. PDF, etc.), and send the result in a POST request to our chatbot application’s custom endpoints.
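The per-file branching in that workflow amounts to a dispatch on each file's MIME type. Below is a hypothetical TypeScript version of that decision (in Paragon it is expressed as conditional steps instead). The MIME type strings and the `webViewLink` field are Google Drive's; the `IngestStrategy` labels, `planIngestion`, and `toIngestBody` (whose output matches an assumed ingestion payload) are our own illustration.

```typescript
// Subset of Google Drive file fields used here.
interface DriveFile {
  id: string;
  name: string;
  mimeType: string;
  webViewLink: string;
}

// Hypothetical strategies for turning a file into text.
type IngestStrategy =
  | { kind: "export"; exportMimeType: string } // Google-native file: export to text
  | { kind: "download" }                        // already text: download as-is
  | { kind: "extractPdf" }                      // binary PDF: needs text extraction
  | { kind: "skip"; reason: string };

function planIngestion(file: DriveFile): IngestStrategy {
  switch (file.mimeType) {
    case "application/vnd.google-apps.document":
      return { kind: "export", exportMimeType: "text/plain" };
    case "application/vnd.google-apps.spreadsheet":
      return { kind: "export", exportMimeType: "text/csv" };
    case "text/csv":
    case "text/plain":
    case "text/markdown":
      return { kind: "download" };
    case "application/pdf":
      return { kind: "extractPdf" };
    default:
      return { kind: "skip", reason: `unsupported type ${file.mimeType}` };
  }
}

// Body for the POST to the chatbot's ingestion endpoint (assumed shape).
function toIngestBody(file: DriveFile, text: string) {
  return { text, metadata: { source: "gdrive" as const, url: file.webViewLink, fileName: file.name } };
}
```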

This workflow is triggered when a user enables their Google Drive integration. Once it runs, every existing file in their Google Drive folder is sent to the vector database, making it available for the chatbot application to use.
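Sending every existing file at once can overwhelm the ingestion endpoint and the embedding API behind it. One common approach, sketched here as an assumption rather than a description of how Paragon's fan-out works internally, is to cap how many requests are in flight at a time. `mapWithConcurrency` is a hypothetical helper.

```typescript
// Run `worker` over every item with at most `limit` calls in flight,
// returning results in the original order.
async function mapWithConcurrency<T, R>(
  items: T[],
  limit: number,
  worker: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  if (limit < 1) throw new Error("limit must be at least 1");
  const results = new Array<R>(items.length);
  let next = 0;
  // Each runner repeatedly claims the next unprocessed index. JavaScript runs
  // this code on a single thread, so `next++` cannot race between runners.
  async function runner(): Promise<void> {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }
  const runners = Array.from({ length: Math.min(limit, items.length) }, () => runner());
  await Promise.all(runners);
  return results;
}
```

In a backfill, `worker` would be the `fetch` POST of one file's text to the ingestion endpoint, and `limit` would be tuned to what that endpoint and the embedding API's rate limits can absorb.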

Webhook-triggered events

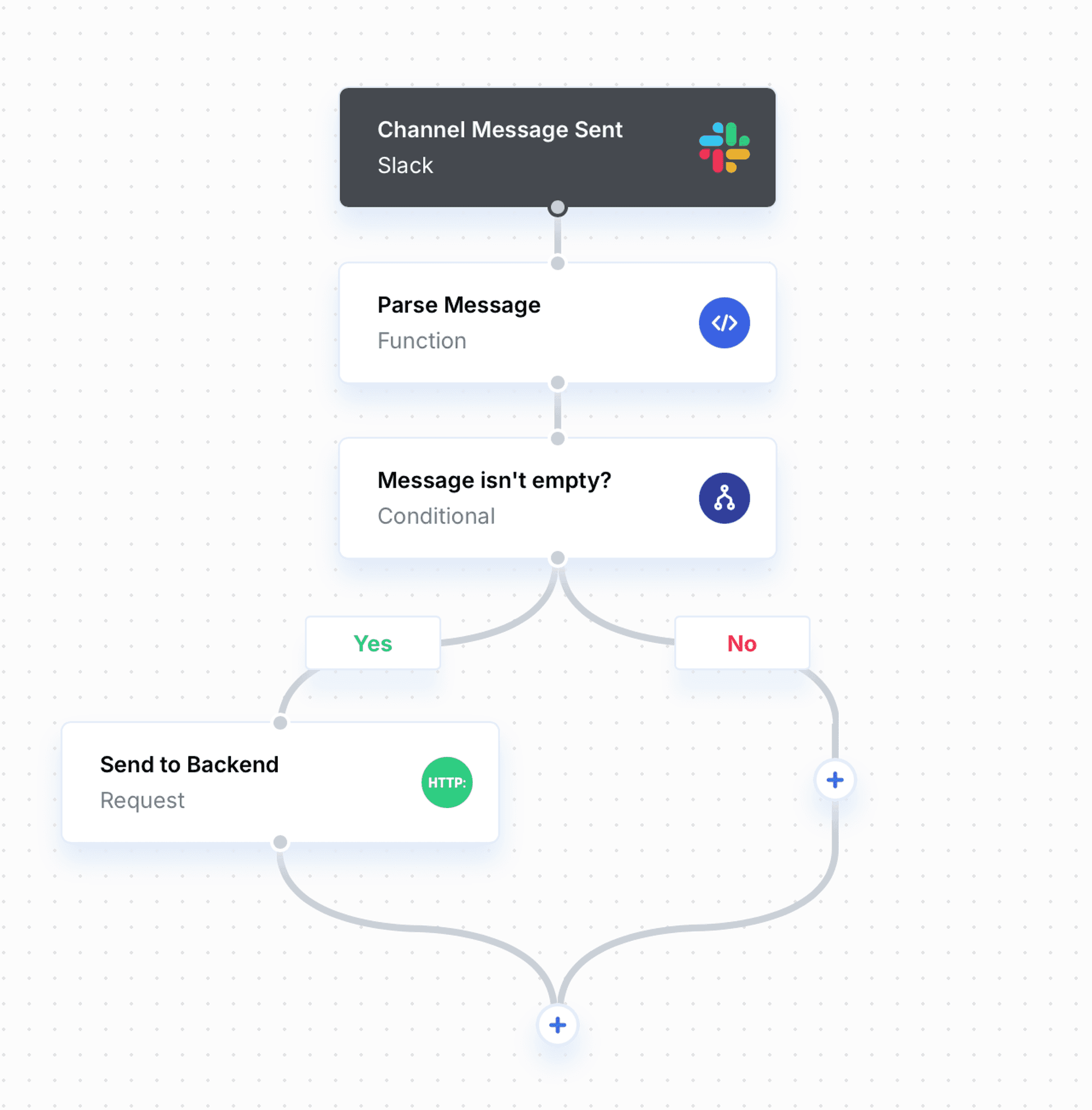

Rather than having Slack send messages directly to our application, we built our RAG-enabled chatbot backend on top of Paragon’s engine, since it handles authentication tokens, queues for storing messages, monitoring, and other features. In this Paragon workflow, we used a Paragon-supported webhook trigger (Channel Message Sent), so Paragon handles listening for webhooks and we can focus on parsing and sending the message data.

This workflow triggers whenever a new Slack message is sent in the channel we gave our application access to. The message is parsed and sent to our chatbot application, where it is converted into vectors and stored in Pinecone.
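If you handled Slack's webhooks yourself instead of through a managed trigger, the parsing step would look roughly like the sketch below. It follows the Slack Events API payload shapes (a one-time `url_verification` handshake, and `event_callback` envelopes wrapping a `message` event) but keeps only the fields used here. `handleSlackEvent`, the `workspaceDomain` parameter, and the output payload shape are assumptions; the permalink is built in the format Slack's web client uses, and `chat.getPermalink` is the API-supported way to obtain one.

```typescript
// Subset of a Slack "message" event.
interface SlackMessageEvent {
  type: "message";
  channel: string;
  user?: string;
  text?: string;
  ts: string;       // e.g. "1700000000.123456"
  subtype?: string; // present for edits, deletions, bot messages, etc.
}

type SlackEnvelope =
  | { type: "url_verification"; challenge: string }
  | { type: "event_callback"; event_id: string; event: SlackMessageEvent | { type: string } };

// Hypothetical result type for this sketch.
type HandlerResult =
  | { kind: "challenge"; challenge: string }
  | { kind: "ingest"; payload: { text: string; metadata: { source: "slack"; url: string } } }
  | { kind: "ignore" };

function handleSlackEvent(envelope: SlackEnvelope, workspaceDomain: string): HandlerResult {
  if (envelope.type === "url_verification") {
    // Sent when the Request URL is configured; the challenge must be echoed back.
    return { kind: "challenge", challenge: envelope.challenge };
  }
  const event = envelope.event;
  if (event.type !== "message") return { kind: "ignore" };
  const message = event as SlackMessageEvent;
  // Skip edits, deletions, joins and other subtypes, plus messages without text.
  if (message.subtype || !message.text) return { kind: "ignore" };
  const url = `https://${workspaceDomain}.slack.com/archives/${message.channel}/p${message.ts.replace(".", "")}`;
  return { kind: "ingest", payload: { text: message.text, metadata: { source: "slack", url } } };
}
```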

As a result, Parato now has access to historical documents like all the files in a Google Drive, as well as real-time data like Slack messages to source answers from.

Wrapping Up

This tutorial should have shed some light on how a RAG-enabled chatbot is built and how multiple third-party data sources can be integrated into the RAG process. Integrating with these third parties and ingesting their data reliably can be challenging, but it ultimately makes your application more powerful, because your chatbot can draw on the same data your customers use.

Just as AI applications can use OpenAI’s models as their LLM, applications that rely on multiple data sources, such as RAG applications, can use Paragon for third-party integration and data ingestion.

Jack Mu, Developer Advocate
