
Guide to Agent Harnesses: Building, Measuring, and Improving Your Agent

The agent harness is a key component for designing agents. Give your agent the tools and environment it needs to revolutionize your users' workflows.


MCP, skills, subagents, tools, context engineering, agent orchestration. New concepts for building agents burst onto the scene seemingly every day. It can be overwhelming for teams building agents today to answer the question: Which of these implementations will actually measurably improve your agent?

Different LLMs respond differently to the same prompts and tools. Improving and optimizing your agent really means tuning its harness (the tools, prompts, and context you give the LLM) together with the LLM itself so that it performs your tasks well.

In this guide on harnesses, we'll tackle:

What is an "agent harness" and how does it impact performance?

How do you measure an agent’s performance in a harness?

How can you use evaluations to optimize your agent?

What is an Agent Harness?

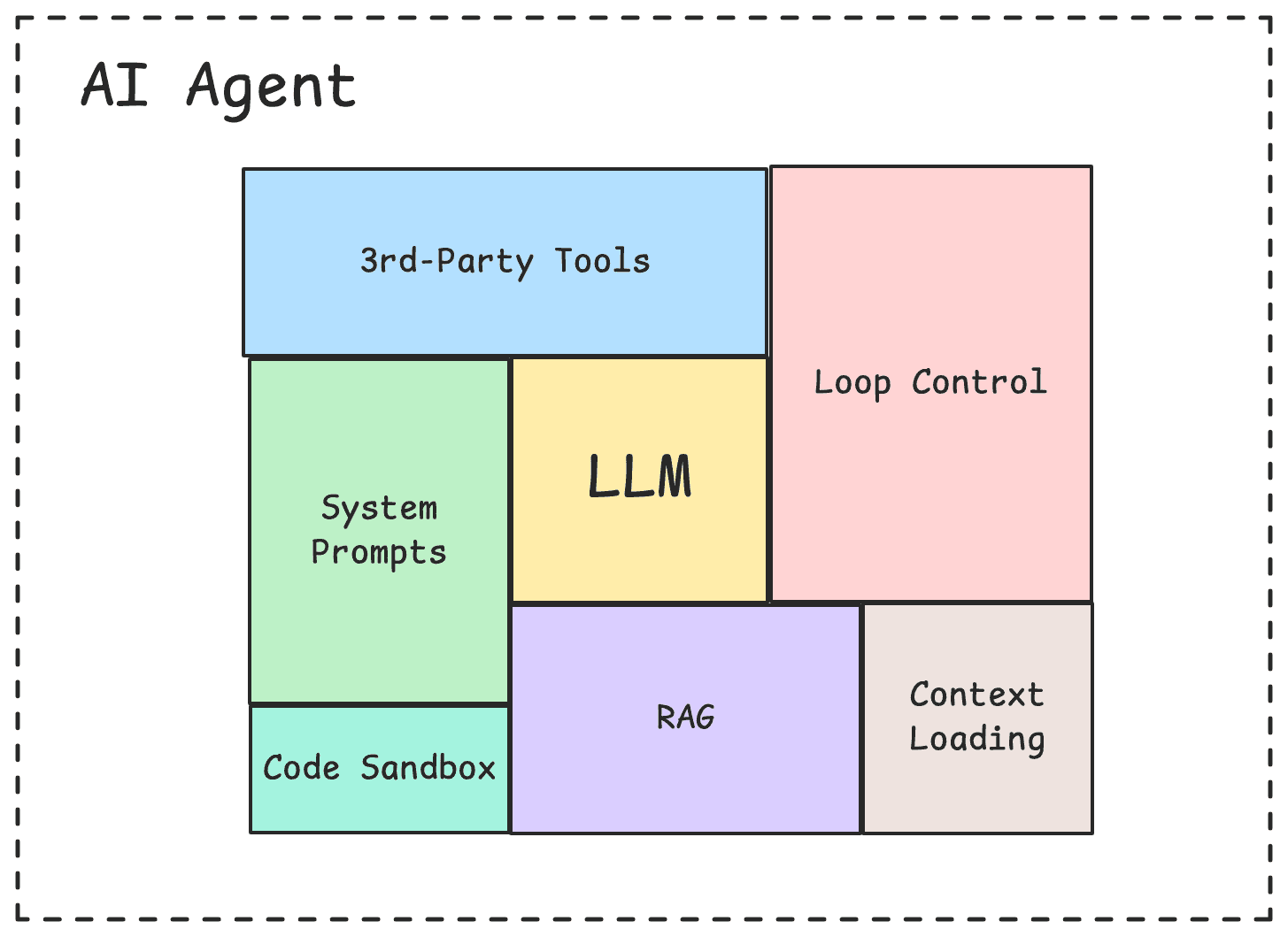

An agent harness is all the context and capabilities you surround an LLM with; think prompts, files, loops, and tools, just to name a few.

Here's a simple example:

A Notion agent is tasked with helping users search their Notion workspace for context relevant to their questions. A simple agent harness could consist of:

The system prompt describing how an agent should behave

Loop control

Tools like search-notion, get-page-contents, and get-block

In this example, we are essentially modifying the base LLM's behavior by giving it a system prompt and Notion tools. Tool providers like ActionKit have pre-built tools for 3rd-party actions in Notion, Slack, Google Drive, and Jira, making it easier to wire up harnesses for agents that need to integrate with other platforms.
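The harness described above can be sketched in a few lines of Python. This is a minimal sketch, not ActionKit's or any provider's implementation: the tool functions and their stub data are hypothetical stand-ins for search-notion and get-page-contents, and `scripted_model` is a hypothetical placeholder for a real LLM call that returns either a tool call or a final answer.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical stand-ins for search-notion and get-page-contents, backed by stub data.
PAGES = {"page-1": "Car maintenance 2026", "page-2": "LA trip ideas"}
CONTENTS = {"page-1": "Rotate tires in March.", "page-2": "Visit the Getty."}


def search_notion(query: str) -> list[str]:
    """Return IDs of pages whose title contains the query (case-insensitive)."""
    return [pid for pid, title in PAGES.items() if query.lower() in title.lower()]


def get_page_contents(page_id: str) -> str:
    """Return the stored text for a page, or an empty string if it doesn't exist."""
    return CONTENTS.get(page_id, "")


@dataclass
class Harness:
    system_prompt: str                    # how the agent should behave
    tools: dict[str, Callable[..., Any]]  # tool name -> implementation
    max_turns: int = 5                    # loop control: cap on iterations

    def run(self, user_prompt: str, model: Callable[[list[dict]], dict]) -> Any:
        messages = [
            {"role": "system", "content": self.system_prompt},
            {"role": "user", "content": user_prompt},
        ]
        for _ in range(self.max_turns):
            action = model(messages)  # either a tool call or a final answer
            if action["type"] == "final":
                return action["content"]
            result = self.tools[action["name"]](**action["args"])
            messages.append({"role": "tool", "name": action["name"], "content": result})
        return "Stopped: turn limit reached"


def scripted_model(messages: list[dict]) -> dict:
    """Hypothetical LLM stand-in: search, open the first hit, then answer with its text."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool", "name": "search-notion", "args": {"query": "car"}}
    if len(tool_results) == 1:
        first_hit = tool_results[0]["content"][0]
        return {"type": "tool", "name": "get-page-contents", "args": {"page_id": first_hit}}
    return {"type": "final", "content": tool_results[-1]["content"]}


harness = Harness(
    system_prompt="Help users find answers in their Notion workspace. Search before answering.",
    tools={"search-notion": search_notion, "get-page-contents": get_page_contents},
)
answer = harness.run("Any car maintenance I should do in 2026", scripted_model)
```

The `max_turns` cap plays the role of loop control from the list above: without it, a model that keeps calling tools would never stop.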

Other examples of harness components could be a planning tool to decompose a task into smaller sub-tasks, subagents to handle specialized small tasks, and a sandbox to execute code.

Configuring a harness can be hard to get right because of all the moving pieces, but it's the surest way to build agents for specialized tasks rather than a generic agent that behaves like the underlying LLM. To get the harness "right" and build specialized agents, we first need a way to measure an agent.

How to Measure Agent Performance

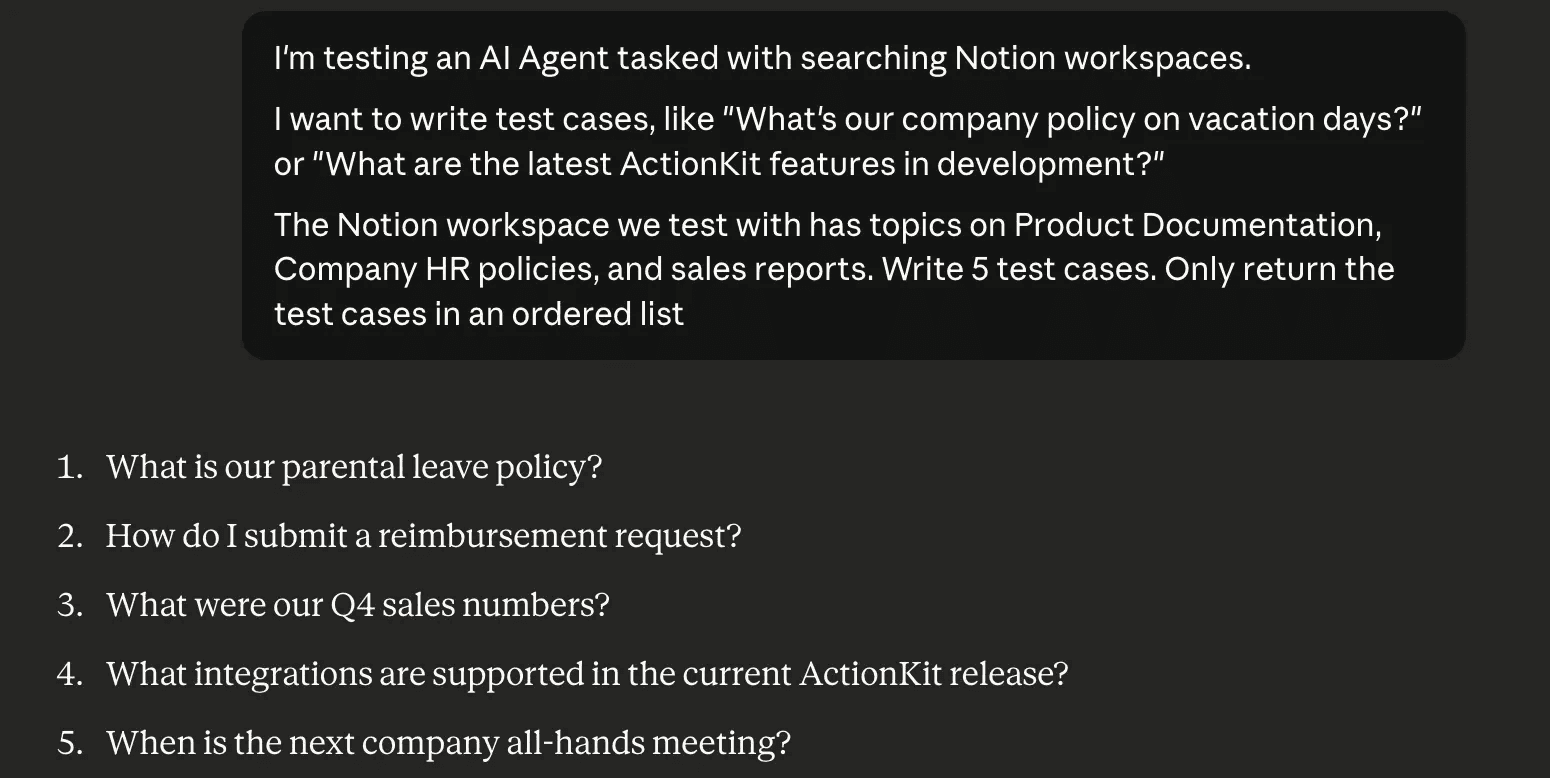

When building an agent, you should have a category (or categories) of tasks that your agent is built to tackle. The more specific these tasks are, the easier it is to measure your agent's performance on them. Even a broad category like "enterprise knowledge" can be broken down into narrower categories of tasks such as "searching Google Drive files," "searching Notion workspaces," and "searching relevant Slack threads." Your task categories guide the test cases you write to evaluate your agent.

| Task Category | Prompt |
|---|---|
| Search Page Content | Any car maintenance I should do in 2026 |
| Ideation | Based off the "LA" pages, what other activities and places should I check out |
| Title Search | How many foods are in the "foods" page |
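One way to keep task categories attached to prompts is to store each test case as a small record. In this sketch, `EvalCase` and the `expected_tools` listed for each prompt are hypothetical (the table above only gives categories and prompts); grouping by category lets you report scores per category rather than as one blended number.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalCase:
    category: str
    prompt: str
    expected_tools: tuple[str, ...]  # hypothetical expectations, used to score tool correctness


SUITE = [
    EvalCase("Search Page Content", "Any car maintenance I should do in 2026",
             ("search-notion", "get-page-contents")),
    EvalCase("Ideation", 'Based off the "LA" pages, what other activities and places should I check out',
             ("search-notion", "get-page-contents")),
    EvalCase("Title Search", 'How many foods are in the "foods" page',
             ("search-notion", "get-page-contents")),
]


def group_by_category(cases: list[EvalCase]) -> dict[str, list[EvalCase]]:
    """Bucket test cases so scores can be reported per task category."""
    grouped: dict[str, list[EvalCase]] = defaultdict(list)
    for case in cases:
        grouped[case.category].append(case)
    return dict(grouped)


groups = group_by_category(SUITE)
```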

While there are different definitions of "agents," the terms that show up in most of them are "tool calling," "loops," and "completing tasks on behalf of users." Completing tasks for users is the end goal; tool calling (to perform actions) and loops (to iterate) are how an agent gets there. Measuring an agent means measuring each of the parts that make it an agent. We can measure:

Tool Correctness: Did the agent select the right tools?

Tool Usage: Did the agent use the tools correctly with the right inputs?

Task Completion: Did the agent complete its tasks given the tools and loop iterations?

Task Efficiency: How efficiently did the agent complete its task? How many loop iterations and tokens did it take?

Let's talk about how to calculate each metric.

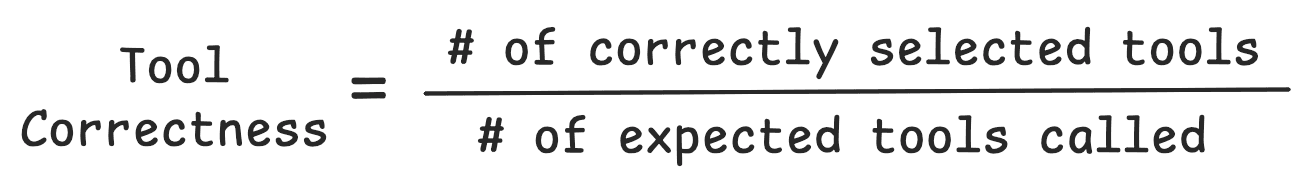

Tool Correctness

Tool correctness is the most objective of the four metrics. It measures what percentage of the expected tools your agent actually called.
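As a formula, tool correctness is the number of expected tools that were called divided by the number of expected tools. Here is a minimal Python sketch, assuming each test case lists its expected tool names. This version ignores call order and extra calls; a stricter variant could penalize unexpected tools or check ordering.

```python
def tool_correctness(expected_tools: list[str], called_tools: list[str]) -> float:
    """Fraction of expected tools that appear among the tools the agent called."""
    expected = set(expected_tools)
    if not expected:
        return 1.0  # assumption: with nothing expected, nothing was missed
    return len(expected & set(called_tools)) / len(expected)


score = tool_correctness(["search-notion", "get-page-contents"], ["search-notion"])
```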

Tool Usage

Tool usage compares the tool inputs to the original prompt. In other words, it measures how well an agent extracts the correct tool inputs from the prompt.

No matter how well-tested a tool's underlying logic is, your agent can't put it to use if it can't fill in the function's parameters correctly.

Tool usage can be measured with an LLM-as-a-judge that compares the prompt to the tool inputs. If your expected tool inputs are highly predictable, you can also measure tool usage programmatically.
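For the programmatic case, here is a sketch of a per-argument score: the share of expected arguments the agent filled in correctly. The helper names and the expected values are hypothetical. Expected values can be exact matches or predicates, which helps with free-text inputs like search queries that have more than one acceptable value.

```python
from typing import Any, Mapping


def arg_matches(expected: Any, actual: Any) -> bool:
    """Compare exactly, or call `expected` if it is a predicate function."""
    if callable(expected):
        return bool(expected(actual))
    return expected == actual


def tool_usage_score(expected_args: Mapping[str, Any], actual_args: Mapping[str, Any]) -> float:
    """Share of expected arguments that are present in the tool call and match."""
    if not expected_args:
        return 1.0
    hits = sum(
        1 for name, expected in expected_args.items()
        if name in actual_args and arg_matches(expected, actual_args[name])
    )
    return hits / len(expected_args)


# Hypothetical expectations for a search call made for the car-maintenance prompt.
EXPECTED_SEARCH_ARGS = {"query": lambda q: "car" in q.lower(), "pageSize": 10}
score = tool_usage_score(EXPECTED_SEARCH_ARGS, {"query": "Car maintenance", "pageSize": 50})
```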

If you notice your tool usage metrics vary widely, it may signal that your tools need a redesign. Consider reducing the number of inputs, refining input descriptions, identifying easily extractable inputs, and rethinking the tool's function to handle dynamic inputs.

Examples of agent-friendly tools include well-documented interfaces like bash and SQL, as well as low-input tools that don't require many brittle inputs.

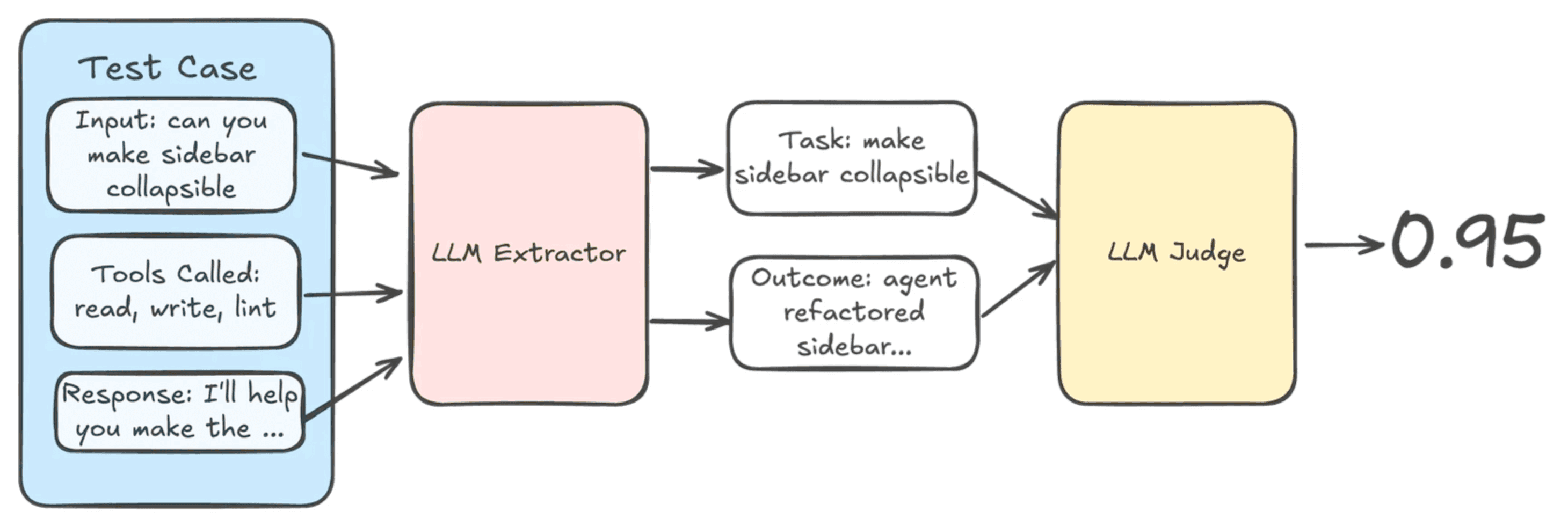

Task Completion

Task completion compares the final output against the original prompt to measure if the original task was completed.

Generally this is done with an LLM-as-a-judge. However, just like tool usage, if your agent has strict outputs, like structured outputs or specific tool calls, then task completion can be calculated programmatically.
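For agents with structured outputs, a programmatic completion check can be as simple as parsing the output and comparing the required fields. This sketch assumes a hypothetical JSON answer format (for example, a count for the "foods" page prompt); your schema will differ.

```python
import json
from typing import Any


def structured_task_completed(raw_output: str, expected: dict[str, Any]) -> bool:
    """True if the output is a JSON object containing every expected key with the expected value."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return False  # free-text answers fail a structured check
    if not isinstance(data, dict):
        return False
    return all(key in data and data[key] == value for key, value in expected.items())


completed = structured_task_completed('{"count": 12, "page": "foods"}', {"count": 12})
```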

Task Efficiency

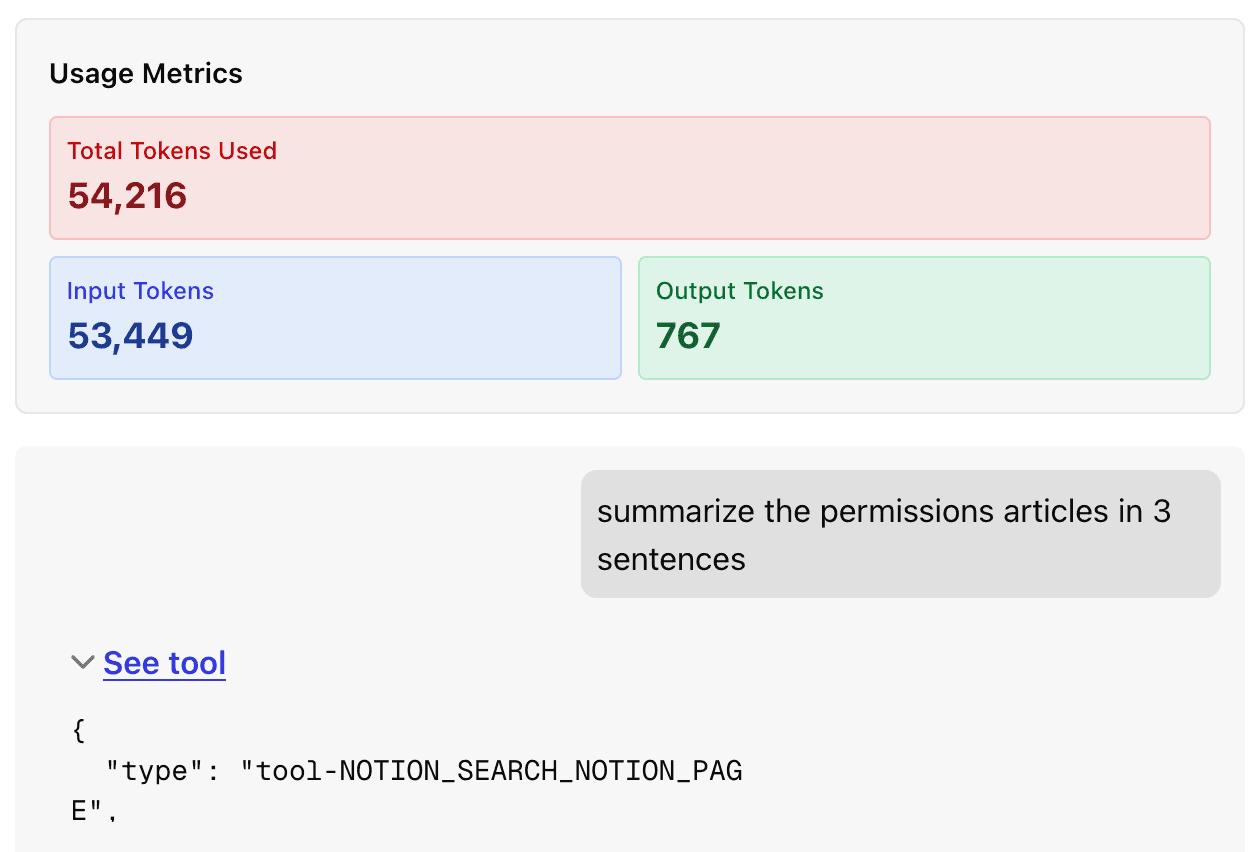

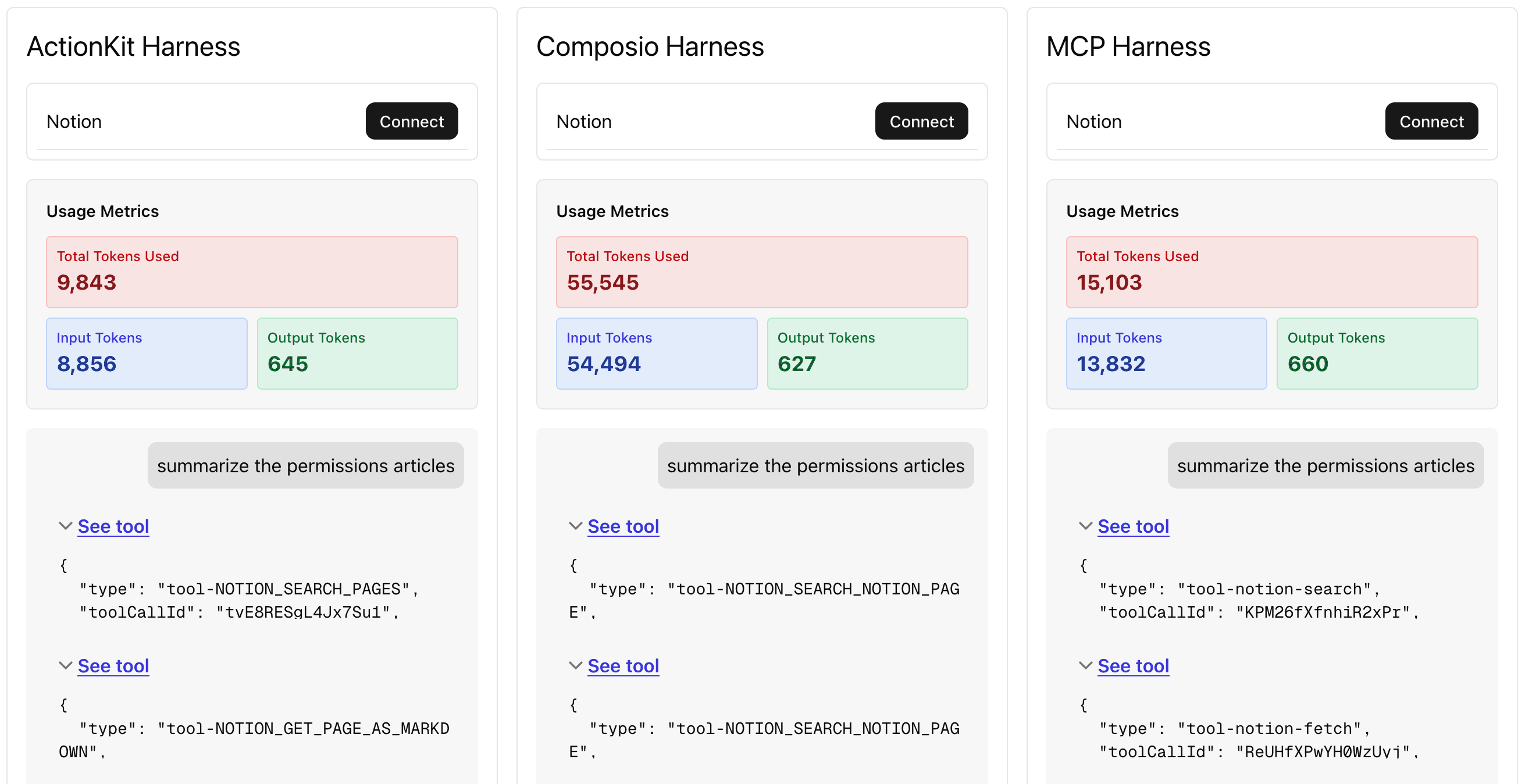

Task efficiency measures how much LLM usage it takes to complete a task. Token usage is the metric to watch if cost matters to your product team. Tools consume input tokens, and some models, such as Anthropic's, charge high prices for input tokens, so loading bloated tool sets can make even simple tasks expensive.

Turns is the metric to watch when latency is a consideration. It counts how many loop iterations the agent took: the more turns, the longer the agent takes to respond. Reasoning is one example of looping where users can notice a significant increase in wait times.

For asynchronous agent workflows, this may not be as important. For chat and support agents, latency becomes important, as every second of wait time increases the probability users will drop off.
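To track both cost and latency, you can log turns and token counts for each run and summarize them. This is a minimal sketch: `RunTrace` is hypothetical, and the per-million-token prices in the usage line are placeholders to replace with your provider's actual pricing.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RunTrace:
    turns: int          # loop iterations the agent took
    input_tokens: int   # should include tool definitions and tool results sent to the model
    output_tokens: int


def efficiency_summary(traces: list[RunTrace], input_price_per_mtok: float,
                       output_price_per_mtok: float) -> dict[str, float]:
    """Average turns, input tokens, and estimated cost per run (prices are per million tokens)."""
    if not traces:
        raise ValueError("need at least one trace")
    costs = [
        (t.input_tokens * input_price_per_mtok + t.output_tokens * output_price_per_mtok) / 1_000_000
        for t in traces
    ]
    return {
        "avg_turns": mean(t.turns for t in traces),
        "avg_input_tokens": mean(t.input_tokens for t in traces),
        "avg_cost_usd": mean(costs),
    }


# Placeholder prices: substitute your provider's real rates.
summary = efficiency_summary([RunTrace(2, 10_000, 500), RunTrace(4, 30_000, 1_500)], 3.0, 15.0)
```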

How to Continuously Evaluate and Improve Agent Performance

Bringing this back to harnesses: continuous agent improvement means using evaluations to iterate on and improve your agent's harness. Let's break this down into steps and walk through an example of a Notion RAG agent that uses Notion tools from the ActionKit API.

1. Build Your Test Suite

You know your problem space best. You know your product best.

So while it may be tempting to have AI write your test cases, the best evaluations start with manual test cases. This means writing prompts you expect users to ask. Even better, use real examples if you have them.

From there, you can use AI to "fan out" your manually written prompts into variations. I picked up this trick from AEO (answer engine optimization) platforms, which use "fan-outs" to generate prompts related to the topic you're trying to optimize for LLM visibility.
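A fan-out step can be sketched as a prompt template plus some light cleanup of the model's response. In this sketch, `generate` is a hypothetical callable wrapping whichever LLM you use, `fake_generate` returns canned text for illustration, and the instruction wording is only an example. The function drops blank lines, duplicates, and echoes of the seed prompt.

```python
from typing import Callable

FAN_OUT_TEMPLATE = (
    "Write {n} variations of the user prompt below that a real user might ask. "
    "Keep the same intent. Return one variation per line, with no numbering.\n\n"
    "Prompt: {prompt}"
)


def fan_out(prompt: str, generate: Callable[[str], str], n: int = 5) -> list[str]:
    """Ask an LLM (via `generate`) for up to n distinct variations of a seed prompt."""
    raw = generate(FAN_OUT_TEMPLATE.format(n=n, prompt=prompt))
    seen = {prompt.strip().lower()}  # skip echoes of the seed prompt
    variations = []
    for line in raw.splitlines():
        candidate = line.strip()
        if candidate and candidate.lower() not in seen:
            seen.add(candidate.lower())
            variations.append(candidate)
    return variations[:n]


def fake_generate(_: str) -> str:
    """Hypothetical stand-in for an LLM call, returning canned text."""
    return ("When is my next oil change due\n\n"
            "Any car maintenance I should do in 2026\n"
            "What car upkeep is due this year\n"
            "when is my next oil change due")


variations = fan_out("Any car maintenance I should do in 2026", fake_generate)
```

Review the generated variations before adding them to your suite; they extend your hand-written cases rather than replace them.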

2. Use (or Build) an Evaluation Framework

Popular evaluation frameworks include Arize, Maxim, and Confident AI. I've had positive experiences with DeepEval from Confident AI, using their open-source Python library to evaluate tool correctness and task completion.

I also recommend giving DeepEval's docs a read. There are excellent guides that we've used to inform our research on optimizing tool calling and optimizing RAG retrieval.

I included the option to build your own evaluation framework because there are times you may want to build your own "primitives" to evaluate agent performance.

We went through this process ourselves for our tool provider product, ActionKit. We built our own framework to evaluate agent performance with ActionKit against other tool providers like MCP servers, comparing tool correctness, tool usage, task completion, and task efficiency to hone ActionKit's tools.

The 4 metrics (tool correctness, tool usage, task completion, task efficiency) are generally useful and a good starting point. But don't be afraid to get creative and build your own metrics and framework. If your agent relies heavily on a graph/workflow runtime, you may want your evaluation framework to measure graph metrics, such as whether your agent hits all the expected nodes for a given task. Metrics like these may be specific to your agent's implementation and require a custom-built evaluation framework.
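As an example of a custom metric, node coverage for a graph runtime could be computed from the list of nodes an agent visited. This sketch assumes your runtime can log visited node names; the node names themselves are hypothetical.

```python
def node_coverage(expected_nodes: list[str], visited_nodes: list[str]) -> tuple[float, list[str]]:
    """Return (share of expected nodes visited, sorted list of missed nodes)."""
    expected = set(expected_nodes)
    if not expected:
        return 1.0, []
    missed = sorted(expected - set(visited_nodes))
    return 1 - len(missed) / len(expected), missed


def visited_in_order(expected_nodes: list[str], visited_nodes: list[str]) -> bool:
    """True if the expected nodes appear in order in the visit log (other nodes may sit in between)."""
    remaining = iter(visited_nodes)
    # `node in remaining` advances the iterator past each match, which enforces ordering.
    return all(node in remaining for node in expected_nodes)


coverage, missed = node_coverage(["plan", "search", "answer"], ["plan", "search", "search"])
```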

Reiterating this point because it's worth repeating, "You know your problem space best. You know your product best."

3. Identify Problem Test Cases and Iterate

This is the action step. Test cases and evaluation frameworks identify your agent's weaknesses.

Like an athlete training for a sport, an agent has its own unique weaknesses, and targeting those weaknesses is how it gets better. Adjust your agent's harness (the system prompt, tools, and environment) to address its weaknesses without introducing regressions.
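To catch regressions, you can compare per-test-case results between your current harness and a candidate change. This is a minimal sketch, assuming you record a pass/fail result per prompt for each harness version; the keys and results in the usage line are invented for illustration.

```python
def compare_runs(baseline: dict[str, bool], candidate: dict[str, bool]) -> dict[str, list[str]]:
    """List prompts a harness change fixed or broke (keys are prompts, values are pass/fail)."""
    shared = baseline.keys() & candidate.keys()
    return {
        "fixed": sorted(p for p in shared if not baseline[p] and candidate[p]),
        "regressed": sorted(p for p in shared if baseline[p] and not candidate[p]),
    }


# Invented results for illustration only.
report = compare_runs(
    {"dinner": False, "dentist": True, "goals": False},
    {"dinner": True, "dentist": False, "goals": False},
)
```

A change that fixes some cases but regresses others is worth a closer look before you ship it.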

Using our Notion RAG agent as an example, we found that gpt-5 was the model that performed best in our ActionKit-provided harness. Of the gpt-5 test cases where the task was not completed, half failed because no tool call was attempted, and the other half failed because of incorrect usage of the search tool.

| Prompt | Tools Called | Tool Usage |
|---|---|---|
| What are some goals I can make in 2026 | None | N/A |
| What are the key components of the RAG tech stack | None | N/A |
| What should I make for dinner? | NOTION_SEARCH_PAGES | "input": {"query": "dinner", "objectType": "page", "direction": "descending", "pageSize": 10} |
| When did I get my teeth cleaned last? | NOTION_SEARCH_PAGES | "input": {"query": "dental", "objectType": "database", "direction": "descending", "pageSize": 50} |
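Breaking failures down like this can be automated once runs are logged. The sketch below encodes the four rows from the table above (all of which failed) and buckets each one. It assumes, as a simplification, that a failed task with a tool call counts as a tool usage failure, which won't always hold in general.

```python
from collections import Counter


def classify_failure(tools_called: list[str], completed: bool) -> str:
    """Bucket a run: passed, no tool call attempted, or (by assumption) a tool usage failure."""
    if completed:
        return "passed"
    if not tools_called:
        return "no_tool_call"
    return "tool_usage"  # simplification: a called-but-failed run is blamed on tool usage


# (prompt, tools called, task completed) for the rows in the table above.
failed_runs = [
    ("What are some goals I can make in 2026", [], False),
    ("What are the key components of the RAG tech stack", [], False),
    ("What should I make for dinner?", ["NOTION_SEARCH_PAGES"], False),
    ("When did I get my teeth cleaned last?", ["NOTION_SEARCH_PAGES"], False),
]

breakdown = Counter(classify_failure(tools, done) for _, tools, done in failed_runs)
```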

To improve our harness, we can emphasize more strongly that the search tool MUST be used and provide example search queries in our system prompt. We may also consider adding a semantic search tool to better handle queries from users who don't have specific keywords in mind.
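A prompt change like this can live in code so it's versioned and evaluated like any other harness change. This is a hypothetical sketch: `build_system_prompt`, its wording, and the example keyword queries are illustrative assumptions, and only the NOTION_SEARCH_PAGES tool name comes from the table above.

```python
SEARCH_TOOL = "NOTION_SEARCH_PAGES"


def build_system_prompt(example_queries: dict[str, str]) -> str:
    """Assemble a system prompt that requires search and shows example keyword queries."""
    lines = [
        "You answer questions using the user's Notion workspace.",
        f"You MUST call {SEARCH_TOOL} before answering, even if you think you already know the answer.",
        "Search with short keyword queries. Examples:",
    ]
    # Hypothetical user prompt -> query pairs; replace with examples from your own data.
    lines += [f'- "{prompt}" -> query: "{query}"' for prompt, query in example_queries.items()]
    return "\n".join(lines)


SYSTEM_PROMPT = build_system_prompt({
    "What are some goals I can make in 2026": "goals 2026",
    "What are the key components of the RAG tech stack": "RAG",
})
```

After a change like this, rerun the full suite rather than only the failing cases, so the regression comparison covers every prompt.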

Wrapping Up

Optimized agents are the result of every part of an agent coming together: the model, the environment, the runtime, the prompts, and, maybe most importantly, the tools.

The path you'll take to optimize your agent and its harness isn't a one-click fix. It takes evaluating and iterating on your agent's harness. And it's how you'll unlock capabilities even a frontier LLM cannot achieve alone.

If you're looking to build the best agents with 3rd-party tools and integrations, take a look at ActionKit for hundreds of 3rd-party tools your agent may benefit from in its harness. Try out Paragon for free and book a call with our team for a demo of the platform and to answer any questions you may have. We'd love to learn more about what you're building and help you build the best agents in the SaaS space.


Jack Mu, Developer Advocate
